PART I

CRISIS AND REVOLUTION

- Chapter 1: Midlife Crisis for an Industry

- Chapter 2: The Business Revolution of the '90s

- Chapter 3: Business Revolution, Technical Revolution -- The Client/Server Office of the Future

The three chapters in Part I lay the foundation for the rest of the book. The first chapter describes the current crisis state of the computer industry. At one time that industry was the shining beacon for the industrial world, and certainly for the United States. Led by IBM, the most admired company in the world, dozens of other companies including DEC, Wang, Apple, CDC, and Univac were proof positive that in at least one segment, American companies could innovate and lead. Companies in many different countries began to view the computer industry as a stable foundation on which they could build a strong employment base. Today much of that has changed, and many people wonder where the industry is going.

The turmoil in the industry has profound implications not only for the companies that build computers, but also for the many organizations that use them. Betrayed by the loss of a single, consistent, unwavering vision portrayed by a true blue unimpeachable industry leader, many organizations wonder where that sense of technological direction will come from in the years ahead. Chapter 1 provides the background for understanding how this crisis of substance and confidence came to be.

While the computer crisis has been building, a business revolution of at least equal scope has been developing as well. Originally based on the Total Quality Management movement of the last three decades, Business Process Reengineering is today's tool for determining how managers should structure their organizations. The basic philosophical precepts of both Business Process Reengineering and Total Quality Management call for a fundamentally different way of working -- and thinking -- at individual, departmental, and corporate levels. This new way of working creates a very real need for a new kind of information system based on providing support for distributed, self-managed teams. That's what Chapter 2 is about.

Chapter 3 brings the first two chapters together. It defines the new computer industry that will develop out of today's crisis. That new industry will provide the technology, and eventually the systems, required to support Business Process Reengineering's organizational structures. By the end of Chapter 3 you will have a clear, conceptual picture of the new computer world order that will exist in the years ahead.

Chapter 1

MIDLIFE CRISIS FOR AN INDUSTRY

Since the 1950s, the computer industry has moved through birth, adolescence, maturity and into a profound midlife crisis. IBM, once the very model of a successful twentieth-century business, is viewed by many as obsolete, yet nobody knows where to look for a new industry leader. Users and vendors of computers are asking themselves profound questions that challenge fundamental beliefs about the computer industry:

- Are mainframes obsolete? Mainframes are supposed to be obsolete or on the verge of being obsolete. However, none of the replacement technologies are mature enough to truly replace the mainframes. True or false? If true, how do we manage the increasingly expensive, hard-to-maintain mainframe software base?

- Can personal computers run the entire business? Personal computers can do more than word processing and spreadsheets, right? So why is it so hard to actually build personal computer applications that perform broader functions in the organization? How can a company justify the increasing cost of maintaining personal computers, their networks, their servers, and their burgeoning support staffs?

- Are new software tools always better than the old ones? New software development tools are supposedly hundreds of times faster than traditional, COBOL-based techniques. Yet, whenever it comes time to develop or enhance the big, enterprise-wide applications that really run the business, COBOL, the COBOL-literate programmers, and all the tools that go with them win the day. Will it be possible to develop applications faster? And how do you satisfy the users who are growing spoiled by the friendly applications they see developed so quickly in the PC world (even if those applications are small in scope)?

- How do the PC software developers and the mainframe software developers talk to each other? Whom do you believe when one camp talks about disciplined development, operational considerations, and the real world, while the other camp shows us faster development, friendlier interfaces, and lower costs? Is there a way to get the best of both? And do you have to eliminate one set of positions to get there?

DEFINING MEANING, VALUES, AND IDENTITY FOR COMPUTERS AND THEIR USERS

Until recently, the computer's role in the world was easy to understand. Mainframes provided business solutions. Perhaps they were too expensive for some applications and organizations. However, once the economies of scale were present, IBM or DEC had the system, the strategy, and the solution. Along with the hardware and the software, these companies clearly defined the organizational structures, the professional staff, and even the consultants to use. On the other side of the computer coin, personal computers offered word processing, budgeting, and desktop publishing just as televisions and stereos offered personal entertainment. Both mainframes and PCs were computers, but other than that, they were unrelated. The leaders of the personal computer industry were IBM and Apple -- and the path was simple and unambiguous. During the '60s, '70s, and '80s, computers became cheaper, better, and easier to use every year. To a large extent, along with death and taxes, one of the few constants of the last few decades was the constant progress in the computer world.

Suddenly, in the 1990s, computers have become confusing: roles are no longer clear, and progress no longer seems quite as certain. Not just IBM, but DEC, Apple, Compaq, and all the other leaders are being forced to redefine their businesses in almost every way; the alternative is extinction. The boundaries between business computers and personal computers are becoming blurred. All of the beliefs that business people and computer people have about computers are being challenged, too.

This book provides a new framework for understanding how computers will be used in the future. First, this chapter explores the computer crisis of the '90s in more depth so that you can understand the full magnitude of the challenge lying ahead. Because that challenge is so heavily grounded in the history of the computer industry, that's what this chapter is about: the history of computer usage in organizations. Later in the book, I'll look at some of the technical underpinnings of the computer world.

This chapter looks at computers from management, user, and business perspectives. After all, computer technology has become one of the largest costs in organizations. Also, as Business Process Reengineering reshapes the way companies work, organizations must invest in yet more information technology. So it's important to understand how the people doing all the investing -- the managers and users -- have come to feel about their work and computers. The result of this analysis will be a view of the computer crisis of the '90s, a crisis that is more profound than most people realize.

1900-1949: ELECTRONIC CALCULATORS

During the first half of this century, computers were scientific curiosities used only for complex scientific and engineering calculations. Although they were powerful compared to mechanical calculators, computers of the time were built for specific purposes and were very limited in functionality.

1950s: ELECTRONIC BRAIN

In the 1950s, stored program computers were invented. By loading instructions into memory, stored program computers could carry out tasks as varied as the imaginations of the users. The modern computer age was born. At the beginning of the '50s, computers were physically enormous, expensive, and very difficult to program and use. Memory was limited to a few thousand bytes, and communication with the machine took place through paper tapes, punched cards, or even hand-set toggle switches. Programming languages had not been invented, so even simple applications took extensive time to develop. During the entire decade of the 1950s, only a few dozen machines were sold, each costing hundreds of thousands of dollars.

| Decade | Theme | Technology |
| --- | --- | --- |
| 1950s | Electronic brain | Programming languages |

The single biggest computer achievement of the 1950s was the invention of programming languages. Before that invention occurred, programmers worked strictly with the ones and zeros used by the computer itself. A programming language is a notation -- sometimes similar to English, sometimes mathematical in appearance -- designed to be understandable by humans rather than computers. The whole point of programming languages is to allow people to think in their own terms when working with computers rather than have to constantly deal with the bits and bytes built into the machine. By introducing languages as a high-level tool for programming computers, the '50s made application development truly approachable for the first time. Even 40 years later, COBOL and FORTRAN (introduced in the '50s) are still two of the most heavily used programming languages in the world.

However, even with stored program capability and programming languages, computers in the '50s were still very limited in function. Permanent storage -- disks and tapes -- had not been invented, so databases were not possible. The primary function of computers in the '50s was still computation. Yet computers made it possible to build large models involving millions of calculations. Those models allowed users to forecast budgets, plan factory schedules, forecast the weather, and even predict elections.

As television was taking off, a computer was used (for the first time in this role) to predict the outcome of the Eisenhower election based on early poll returns. In retrospect, extrapolating early returns involves no wizardry at all. In fact, high-speed communications -- telephones and telegraphs -- played a much larger role in the whole affair than the computer did. Nonetheless, the idea of an electronic brain somehow seeing into the future captured the imagination of millions. Computers, previously mysterious, suddenly appeared understandable. By the end of the 1950s, computers fascinated the public.

1960s: BUSINESS MACHINE

In the '60s, a decade of unbounded hope, computers really learned to walk. People began to view computers as artificial intelligences that might well one day replace people in all kinds of tasks. By the middle of the '60s, computer companies had realized that the potential marketplace was huge -- much larger than the few dozen computers that IBM predicted as the market size in the '50s. With the introduction of permanent storage, particularly magnetic disks, databases became possible.

| Decade | Theme | Technology |
| --- | --- | --- |
| 1950s | Electronic brain | Programming languages |
| 1960s | Business machine | Operating systems |

The database, in turn, promoted a vision of a "databased" organization, in which the computer could act as a central coordinator for company-wide activities. In this vision, the database became a key corporate resource. For the first time, people were viewing computers not just as arcane predictive devices. Instead, they saw computers as a true competitive advantage. The idea of information systems for business took shape. In other words, computers became important business machines.

1960s DREAMS

Early in the 1960s, President John F. Kennedy committed the United States to putting a man on the moon by the end of the decade. Technology had moved to center stage. Naturally, computer scientists and others were able to dream just as boldly as anybody else. Driving a great deal of this optimism was a new view of computers. Unlike the devices of the '50s, computers were now able to work with far more than numbers and graphs. In the future, people thought computers might be able to process letters, words, concepts, and potentially even emotions. Words, concepts, and emotions provide a pretty broad palette -- even for computer visionaries. As part of a decade of unbounded hope, the computer contributed its fair share of fond dreams.

When you can conceive of the computer as the first machine that amplifies the brain, why not take a step further? Imagine that same computer eventually replacing the human brain. In fact, why not think of the brain as itself being just a form of chemical computer? Consider some of the ambitious goals that people had set for computers during the '60s.

- Computers would be able to read handwriting, recognize spoken sentences, translate the results into digital form, and, most ambitious of all, understand what they were receiving.

- Computers would be able to translate from any human language (like English, German, or French) to any other language, taking into account idioms and ambiguities, and produce better translations than even gifted human translators.

- Computers would be able to play grand master level chess and world-class Go.

- Computers would be able to exhibit learning behavior and carry out most mental tasks that humans do today.

1960s DISILLUSIONMENT: COMPUTERS CAN'T LEARN (YET)

Most of the predictions listed previously seem just as far away today as they did 30 years ago. Even if programmers can make computers play chess better today, they still don't know how to teach a computer to learn very well. That most basic of human attributes -- the ability to learn -- is still reserved for people, not computers. So the '60s also marked the first big period of disillusionment with computers; they were not electronic brains or replacements for humans, per se.

1960s ACCOMPLISHMENTS: COMPUTERS FOR BUSINESS

Today the computer is the tool par excellence of the (large) organization. Early in the 1960s, people still thought of computers as large calculating machines. Occasionally, advanced thinkers started to think about them as potential artificial intelligences. However, by the end of the decade, a new role was clear: the computer was a superb tool for automating complex business processes.

Computer technology itself played a major role in elevating computers into this humdrum and yet invaluable role inside organizations. All through the '50s and early '60s, computers ran just one application at a time. Ironically, the electronic brains of the '50s were just as personal as today's PCs; it's just that their use was restricted to a few dozen privileged computer scientists worldwide. During the '50s, programming was done sitting at the computer's console, and programs were run during personally reserved time slots; virtually a personal computer in many ways.

The '60s also saw the development of modern operating systems. In fact, three of the four leading big-computer operating systems of the '90s -- OS/360 (the predecessor of MVS), UNIX, and DOS/360 (unrelated to MS-DOS) -- were developed in the '60s. These operating systems allowed the computer to be a shared, constantly available resource. Suddenly, computers became big business: both in the sense of being a big market and in the sense of making big businesses more efficient.

Much of this book describes the ways that computers are now changing the way large organizations operate. In the '60s, rather than changing organizations, computers preserved them. Large organizations that were previously drowning in paperwork suddenly found that computer technology allowed them to preserve their existing structures efficiently. Rather than change existing processes, computers made them faster. In fact, one of the adages of the computer industry, still viewed by some as wisdom today, is that a business process should never be automated until it basically works; don't change the process -- just make it faster.

Although computers in the '60s did not fundamentally change business organizations, they played a major role in keeping those organizations functional as they grew. The 1960s was a decade of multinational corporations, worldwide airline reservations systems, global banking, and worldwide credit cards. Conglomeration and acquisition became a normal route to corporate growth. At the center of all this bigness was the computer. The computer made complex, centrally controlled bureaucracies possible. It mattered little that these bureaucracies simply automated many of the inflexibilities and overheads of the past. Growth for its own sake was the song of the period.

By the end of the 1960s, computers had become truly indispensable for any large organization. Along with big computers and big computer systems, organizations invested massively in computer departments, programmers, professional and support staff, and custom applications software. By 1970, most large organizations were amazed at the amount of money they were spending on information technology (IT). Companies were suddenly spending millions of dollars on what was previously a non-existent budget category. At first, IT line items were classified simply as accounting expenditures, buried somewhere. However, as costs continued to escalate and staffs continued to grow, the amounts soon became too large to be buried. Suddenly, management was spending anywhere from 1/2 percent to 10 percent of its total budget on this newfangled computer stuff.

To put this in perspective, realize that in 1950 no business budget anywhere made any provision for IT expenditures at all; it wasn't possible. Even as recently as 1960, IT expenditures were typically limited to a handful of big companies with specialized needs. Also, in 1960, chances were good that any particularly large IT budget was directly associated with either scientific research or engineering design; the cost could be directly justified in connection with development of new products. By 1970, though, every large company was spending a significant part of its budget on computers. And, at last, by 1970, it appeared that most companies had built most of the applications that made sense. Expensive, probably worth it, and finally containable in cost -- what a relief.

1970s: THE DATABASED CORPORATION

After finally reaching an apparent plateau, the entire computer world changed between 1968 and 1972. Databases, terminals, networks, and permanent storage (disks) all became practical at about the same time. The result of this new technology was to cause almost all existing applications to become obsolete and to create a need for a whole class of new applications. These applications would collectively dwarf everything that had come before in terms of cost and complexity.

| Decade | Theme | Technology |
| --- | --- | --- |
| 1950s | Electronic brain | Programming languages |
| 1960s | Business machine | Operating systems |
| 1970s | Databased corporation | Databases, terminals, and networks |

Until the database revolution that ushered in the 1970s, many senior managers had a hard time fully understanding the potential of computers. At first they viewed computers just as part of the administrative accounting systems. Later, when IT costs continued to grow, management felt uncomfortable, but many decided to ignore it. Databases, terminals, and networks changed all that.

In every large organization, isolation is a fundamental fear. The bread and butter of every business school and business magazine are stories about senior management teams that lose touch with their markets and customers because they can't get access to accurate information in a timely fashion. All of a sudden, the computer promised to fix that isolation problem.

In the early '70s, the concepts associated with on-line databases -- databases connected to and fed by networked terminals -- were suddenly being described not just in technical magazines read only by computer professionals, but in the Harvard Business Review and other business magazines normally read only by executives. Management could arrange to eliminate all isolation by:

- Viewing information as a key corporate resource

- Building a totally consistent, company-wide, integrated database

- Ensuring that information was entered into and updated in that database as soon as that information entered the company

Sales information, customer complaints, up-to-date costs, inventory, and cash levels -- you could view all of these at the touch of a keyboard in accurate and consistent form. Just build an on-line database, create a company-wide communications network, and put terminals everywhere, and the dream becomes reality. After all, compared to putting a man on the moon or making computers play chess as well as grand masters, how hard can building the total corporate database be?

The '70s were a remarkable decade from a management and expenditure perspective. In just 20 years, from 1950 to 1970, companies that didn't even have budget categories for IT built entire IT organizations and budgets that were on the verge of being unsupportable. Yet, by the beginning of the '70s, virtually all of those same companies, after reading the Harvard Business Review and similar publications, committed themselves to computer expenditures yet again, many times higher than before. That's what it takes to build a totally wired, database-centered organization.

How much should an organization spend on information technology? In 1970, very few people asked this question; by 1980, everybody wanted to know. As the computer industry developed into a major industrial and economic sector in its own right, a legion of consultants and academics analyzed spending patterns to answer the question. By the late '70s, a rough rule of thumb had emerged: organizations should target spending around 1 percent of their expense budgets on IT.

Keep this 1 percent number in the right context. The number is averaged across a hugely diverse range of companies. Therefore, like all averages, the 1 percent rule applied loosely to everyone and strictly to no one. For example, manufacturing companies really should have been spending around 1 percent of their budgets on computer expenditures if industry averages were any indication at all. However, banks, investment brokers, and insurance companies should have been spending around 10 percent of their (noninterest expense) budgets on information technology. Information is their product. At the other extreme, retail organizations had razor-thin margins and needed to keep IT costs closer to 1/2 percent of budgets.
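To make the arithmetic behind the rule of thumb concrete, here is a minimal sketch that applies the three sector rates quoted above to an expense budget. The sector labels, the function name, and the $200 million budget are assumptions chosen purely for illustration; only the percentages come from the text.

```python
# Rule-of-thumb IT share of the expense budget, by sector.
# The rates are the ones quoted in the text; the sector keys are
# hypothetical labels chosen for this illustration.
IT_SHARE_BY_SECTOR = {
    "manufacturing": 0.01,        # around 1 percent
    "financial_services": 0.10,   # around 10 percent (noninterest expense)
    "retail": 0.005,              # closer to 1/2 percent
}


def target_it_budget(expense_budget: float, sector: str) -> float:
    """Return the rule-of-thumb IT spending target for a given budget."""
    return expense_budget * IT_SHARE_BY_SECTOR[sector]


if __name__ == "__main__":
    budget = 200_000_000  # an assumed $200 million expense budget
    for sector in IT_SHARE_BY_SECTOR:
        print(f"{sector}: ${target_it_budget(budget, sector):,.0f}")
```

The same budget yields targets that differ by a factor of twenty between retail and financial services, which is why the 1 percent figure works only as an average.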

The '70s were marked by two key trends in management's understanding of computers:

- The executive team learned how computers and on-line databases could make their enterprises more competitive. In particular, senior managers saw computer-based information systems as tools for keeping the top of the company in touch with customers and daily trends, and eliminating the dreaded isolation from reality.

- Management realized that building a modern on-line organization was hugely expensive. Yet by about 1980, management believed that they had rules of thumb for appropriate expenditure levels and a broad base of experience on how to build modern information systems in a controlled fashion.

Most of all, the 1970s produced a vision for the future: information is instantly available, is always up-to-date, is organized in a consistent database that allows a company to operate faster, and enables everybody in the organization to stay in touch with the real world. A fine vision to keep in front of us.

1980s: THE BEGINNING OF THE END AND THE END OF THE BEGINNING

The '80s were a profoundly schizophrenic period for both the computer industry and its customers. On one hand, mainframe-based systems and the approaches to building them were finally becoming mature and predictable. Many organizations could begin to think of a future in which most of the applications required to run the business would be in place around a coherent, shared database. So in the safe, comfortable mainframe world, management in the '80s felt that the beginning of the end, in terms of meeting users' needs, was in sight.

On the other hand, that was the same decade in which the personal computer turned the entire computing world on its ear. By the end of the 1980s, personal computers had totally changed users' expectations and created a demand for a completely new class of applications and computer systems. In the personal computer world, the decade was not the beginning of the end, but the end of the beginning. The end of the beginning? Yes. By 1989, even mainframers had to admit that personal computers were here to stay, and at the same time, personal computer bigots realized that those same personal computers needed to grow up and play a role in running the business. Making users more productive was not enough; the PCs had to produce a bottom-line return. The next two sections of this chapter examine these two opposing views of the '80s in more detail and show how they eventually came together to create the situation driving the computer industry in the '90s.

1980s, TAKE ONE: AN ADULT INDUSTRY -- SOFTWARE ENGINEERING FOR MAINFRAMES

The 1980s were a period of profound contradiction. First, the personal computer industry was working through its infancy; I'll get to that in the next section. Second, the conventional, run-the-business mainframe industry was dealing with a serious midlife crisis at the same time that technology itself was largely standing still.

| Decade | Theme | Technology |
| --- | --- | --- |
| 1950s | Electronic brain | Programming languages |
| 1960s | Business machine | Operating systems |
| 1970s | Databased corporation | Databases, terminals, and networks |
| 1980s | Software engineering | CASE, methodology |

Standing still? What about minicomputers, packet switching, computer-aided software engineering (CASE), and so on? Yes, there was continuing ferment and change in the big computer industry. Computers continued to get better at a pace truly frightening to anyone charged with managing them. At the same time, large computer technology at the end of the 1980s was very similar to the technology at the beginning of the same decade: faster, cheaper, more widely available, with lots of new features, but basically unchanged qualitatively. For example, a mainframe application built in 1980 looked virtually identical to one built in 1990; unchanged in all ways that matter to users. By 1990, terminals had become commodities costing a few hundred dollars. The relational database model had become dominant. Software development tools had changed enough to be almost completely different from what they had been ten years earlier. Still, if you were to take the largest applications run by most organizations and compare both the applications and the infrastructure supporting them over the ten-year period, you would find surprisingly little change. Even the acronyms hardly changed: MVS, IMS, CICS, VMS, COBOL, DB2, SNA, DECnet, MPE, Guardian, JES, IDMS, and on and on. So what changed?

In 1970, business had big dreams: on-line corporations, wired desktops, totally consistent databases. By 1980, the reality had set in: the vision was far harder to implement than anyone had imagined. However, rather than back off, the industry hunkered down and developed the necessary tools, disciplines, and methodologies required to make possible the big systems required by the dream. Not just possible, but predictably possible. Management was willing to accept big budgets and long delivery schedules, but only if the promised applications would actually arrive on schedule and in good working order.

By 1980, the computer industry was beginning to mature. In March 1979, the Harvard Business Review published a milestone article by Richard Nolan. That article (following the work of an earlier HBR article written in 1974 by Nolan and a colleague, Cyrus Gibson) documented the stages most organizations go through to build increasingly sophisticated computer systems company-wide. In a sense, Nolan, discussing stages of growth and maturation, was like the Dr. Spock of computer development. Just as a generation of American parents learned about the stages of child development from Spock, a generation of business and computer managers learned to recognize computer organizational maturation from Richard Nolan.

Technically, the industry was not really standing still. True, big computers, their databases, and related development tools had reached a plateau with little qualitative change. However, the focus and energy of the big-computer industry shifted from the computers and databases to the process of designing and building applications. Computers were here to stay. Management was even excited about them. But the new question was, how do you build applications for those computers in some kind of disciplined fashion?

In many ways, the changes taking place during the early '80s in the mainframe world were very similar to the changes taking place now in the personal computer and client/server world. First, technology revolving around databases, networks, and terminals offered substantial business benefits. Second, knowing how to predictably and reliably build large applications to take advantage of this technology was a problem. Third, system development professionals gradually learned how to make this technology work in a trustworthy fashion.

So the 1980s were a period when business focused on converting application development from an art to an engineering discipline. This conversion process yielded two highly visible outcomes: methodology and CASE.

METHODOLOGY

A methodology is a formal prescription, a road map describing how software should be built. Methodologies range from loose frameworks that describe basic design approaches to 60-book encyclopedias that define every step, every hour and minute, and every organizational function required to build an application. As you would expect, an industry desperate for predictability and cost control grabbed at methodology as a saving grace, and the result was partly a huge religious fad. However, at the core of each methodology was a fundamental insight: software design is a formal process, one that can be studied and improved. Much of this book is devoted to laying out a framework for the next generation of design methodology, one that supports development of distributed applications.

COMPUTER-AIDED SOFTWARE ENGINEERING

Along with the development of methodologies (and there were many of them) came the software tools to support both the methodologies and the design process: CASE (computer-aided software engineering). Why not use computers to help write programs? If software design is to become an engineering discipline, why not have computer-aided software engineering tools? And thus was born a billion-dollar industry.

Consulting firms developed, taught, and marketed methodologies all over the world. CASE vendors offered tools to automate both design and the associated methodologies. And, finally, system integrators offered the ultimate in predictability: they would guarantee to build applications within a budget, transferring any risk of overrun from user to integrator. So by 1990, CASE had become a billion-dollar industry segment devoted to building better applications more predictably.

Methodologies, CASE tools, and system integrators do not represent absolute guarantees of perfect systems always finished on time; nonetheless, the big-computer industry reached maturity at the end of the 1980s. MIS organizations do know how to build big systems. Methodologies do prescribe ways to build high-quality systems. CASE tools do support analysts, designers, and developers in building bigger systems better. And for those projects that warrant it, system integrators do offer reliable application delivery within fixed budgets. MIS, vendors, and the industry as a whole had every reason to feel proud of the state of the art by 1990. However, while the industry was growing up, the world around them was changing completely. To see this, revisit the '80s from the second perspective, that of the personal computer.

1980s, TAKE TWO: PERSONAL COMPUTERS -- ARE THEY TOYS OR APPLIANCES?

The 1980s marked the decade of the personal computer. By 1990, there were 30 thousand mainframes in the world -- the largest population of mainframes you're ever likely to see. In that same year, there were over 30 million personal computers.


| Decade | Theme | Technology |
| 1950s | Electronic brain | Programming languages |
| 1960s | Business machine | Operating systems |
| 1970s | Databased corporation | Databases, terminals, and networks |
| 1980s | Software engineering | CASE, methodology |
| | Personal computing | Personal computers, graphical interfaces, and local-area networks |

In the '80s, the personal computer industry went through the same kind of birth process that mainframes went through in the 1950s. For the first half of the decade, management and MIS were convinced that personal computers were just a passing fad. After all, calculators were a big deal in the 1970s, and although they didn't disappear, they hardly had a profound effect on any company either fiscally or organizationally. So why should the next electronic wave be any different? In fact, why not think of personal computers as just bigger, better calculators? Wait long enough and PCs would also become cheap, dispensable, and not worth thinking about anymore.

However, by 1985 it had become clear that personal computers were here to stay, and in a way different from calculators. Rather than personal computers becoming cheaper, users were making them more expensive with their insatiable need for more power, more storage, and more display resolution. Even peripherals were evolving. As dot-matrix printers became inexpensive, users discovered laser printers. As fast as disk price per byte dropped, users developed a need for many more bytes. So not only were personal computers here to stay, but costs were going up, not down. Even in the face of rapidly improving PC technology, users' appetite for ever more power was generating such a healthy demand that personal computer expenditures continued to increase faster than computer prices were falling. Still, even in 1985, nobody imagined that personal computer expenditures would ever catch up with -- let alone exceed -- mainframe costs. However, it was clear that personal computers were changing users' expectations.

Until the second half of the '80s, most individuals had only limited access to computers. Even if they had a terminal on their desk, that terminal was typically used only to run applications developed and maintained by the central IT organization. True, some engineers and scientists had regular access to timesharing systems with new tools like word processors and other personal tools -- but they were a tiny minority. And although dedicated word processing systems from Wang, NBI, and others were starting to show a new way of using computers, those word processors really weren't computers. They were just expensive appliances, like copiers -- so they, too, were ignored.

By 1985, all that comfortable smugness was starting to change. Several million users had been exposed to Multimate, VisiCalc, 1-2-3, dBASE, and WordStar. While addressing special needs, these applications were also dramatically easier to learn and use than mainframe applications. In particular, VisiCalc, 1-2-3, and dBASE were starting to make users think in new ways -- those tools could be used to build custom applications:

| Why wait for MIS to develop a sales forecasting system when your local hacker can build the same system in three days at his desk? |

| Why wait, particularly when the system developed in three days will be easier to use, more flexible, and will run on cheaper hardware? |

| And, most of all, why wait when the MIS system will take two years, cost tens of thousands of dollars, and then be too expensive to run anyway? |

Of course, the applications that could be developed with 1-2-3 and dBASE were highly limited in nature: they never dealt with shared information and were not capable of handling large amounts of data. So the threat to MIS, while starting to be visible, was still clearly very limited in scope.

Still, around 1985 computer professionals started taking PCs seriously for the first time -- and when they did, they didn't like what they saw. The first dislike stems from a basic cultural division between MIS staffs and PC users that still exists today. This division is responsible for many of the problems that must be solved in the '90s as users move to the next plateau of computing. Simply put, MIS concluded that all those millions of personal computers were fancy toys or sophisticated appliances. Remember that the MIS community was wrestling with all the issues of building serious applications in a predictable fashion. From that perspective, PCs lacked all of the infrastructure so painfully built up in the mainframe and minicomputer world over a 20-year period. For example, PCs lacked all of the sophisticated timesharing and transaction-oriented operating systems, all of the industrial-strength databases, all of the batch-scheduling facilities, and all of the CASE tools and modern programming languages.

So the MIS community woke up around 1985, poked and sniffed at the personal computer revolution, decided it was irrelevant to everything they were doing, and went back to sleep. And the worst of it is that they were right. Personal computers in 1985 could not be used to build run-the-business applications, no way, no how. And because those large business applications still needed to be built and run, it's hard to see how the big computer community could have reacted much differently. There just aren't enough hours in the day to live twice.

The result of that disregard is the cultural split we all live with today. Because the MIS computer professionals found early personal computers inadequate, a new breed of computer professional grew up during the '80s. Yesterday's teenage hackers, dBASE, 1-2-3, BASIC, and C programmers have become today's client/server gurus. Yesterday's basement developers have become today's personal computer system integrators. And yesterday's computer club president has become today's network computer manager. A completely separate personal computer profession has grown up to parallel the MIS computer profession. And integrating those two professions -- with different value systems, different technical backgrounds, and different beliefs -- is one of the challenges of the '90s.

1990s: CLIENT/SERVER AND DISTRIBUTED COMPUTING

The technical results of personal computer technology are clear and obvious: graphical user interfaces that are easy to use, virtually unlimited access to computer power on individual desks, and a new way of thinking about computers and the applications that run on them. What's not so obvious are the business implications of PCs. The question management thought had been answered in 1980 came back with renewed force in the 1990s: How much is the right amount to spend on information technology? This time the question is far more serious. To see why, consider both the personal computer revolution and mainframe costs while all those personal computers were being bought.

| Decade | Theme | Technology |
| 1950s | Electronic brain | Programming languages |
| 1960s | Business machine | Operating systems |
| 1970s | Databased corporation | Databases, terminals, and networks |
| 1980s | Software engineering | CASE, methodology |
| | Personal computing | Personal computers, graphical interfaces, and local-area networks |
| 1990s | Client/server | Distributed computing |

OFFICE AUTOMATION

At least today, personal computers are office machines. The personal computer industry is based on concepts of office automation first made popular in the '70s. At that time, American management was just starting to be concerned about global competition. Investment was a much-discussed topic: how much should organizations be investing in the future? Along with investment, another concern was overhead, particularly in the form of bureaucracy. At the time, one popular theory was that office workers, the ones who populate that bureaucracy, didn't have enough support. Why not invest in the office just like investing in the factory? According to this theory, a factory is a plant for processing physical goods, and an office is a plant for processing information. Given this model, why not invest in equipment to help office workers, now called information workers, to process that information more efficiently? Hence, the concept of office automation was born.


Today, office automation is usually associated with dreams of paperless offices: administrative systems in which all forms, reports, and other paper have been replaced by magical computer screens. Although this is in fact a goal of office automation, it is much more important to remember the other and original goals:

| Increase the efficiency of the office worker. |

| Make administrative processes cheaper and faster. |

| Reduce overhead cost tremendously. |

In many ways, personal computers both helped and hurt office automation. They helped office automation because they made easy-to-use computers truly inexpensive, landing the machines on many more desks more quickly than even the most ambitious office automation prophets ever predicted. Yet personal computers hurt office automation because they demonstrated that the goal of eliminating paper, simplifying administrative processes, and changing the office takes far more than just technology. Today's office has more paper than ever. Laser printers, desktop publishing, spreadsheets, and electronic mail systems generate more paper faster than ever. Thus in the early '80s, the first international Office Automation Conference took place, and by the mid-'80s, the last one ever marked the end of the office automation dream in its late-'70s, technology-driven form.

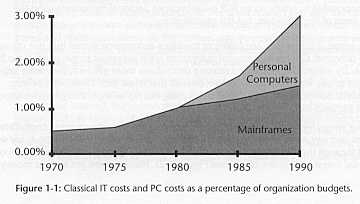

What was happening to mainframe computer costs while personal computers were creeping onto desks all over the world? First, classical IT costs continued to increase. That average cost of 1 percent had increased to 1.5 percent of corporate budgets by 1990. Information-intensive companies -- the banks and insurance companies -- were spending around 15 percent of their budgets on computers. Worse, this 50 percent budgetary increase, because it's expressed as a fraction of the total budget, is automatically adjusted for inflation (see Figure 1-1). So the cost of growing the mainframe or minicomputer system that ran the business grew by well over 50 percent through the 1980s. Bad enough.


At the same time, expenditures on personal computers had grown to be fully equal to expenditures on MIS by the end of the 1980s. So while the average company was spending about 1.5 percent of its budget on mainframe applications, the same company was spending another 1.5 percent of its budget on personal computers. Therefore, the total IT budget, including classical IT and personal computers, had increased from around 1 percent to around 3 percent. Even worse, banks and insurance companies were spending about 25 percent to 35 percent of their noninterest budgets on computers -- the largest single noninterest cost in the entire company. So IT expenditures by the end of the '80s had tripled. Most companies had reached the point where they were simply spending more than they could afford on computers.

DRAWING THE LINE

The 1990s can best be characterized as the decade in which management finally decided that they couldn't take the mounting IT costs anymore. Something had to give. The simplest way to understand the dilemma is to consider mainframes and personal computers in their worst light:

| Mainframe systems run the business. However, they are hugely expensive, hard to use, inflexible, and time-consuming to develop. Still, the systems definitely pay their own way; after all, they run the business. Every transaction, every piece of work done on a mainframe can be, and is, justified on an ROI (return on investment, the classical management measure of investment justification) basis; new mainframe applications don't get built unless there's a solid business reason for doing so. |

| Personal computers make individuals more productive. They're easy to use, and application development is relatively quick and flexible. The problem is that personal computers can't be used to run the business. Although individual personal computers are inexpensive, in the aggregate they cost as much as mainframes. They require support, network connections and servers, and a variety of other infrastructures. Worst of all, the costs are virtually impossible to justify on an ROI basis because personal computers meet the needs of the individual, not the organization. |

In a sense, it is precisely the failure of the office automation dream that has created this dilemma. Originally, personal computers were supposed to run parts of the business. By eliminating paper and making procedures more efficient, they were supposed to pay for themselves. The problem is that it just didn't turn out that way. Personal computers yielded personal benefits, but not in ways that generate a measurable payback.

In the early 1990s, the Bureau of Labor Statistics studied office worker capital investment and any associated productivity increase. The startling result of the study was that through the '80s, American companies did steadily build a significant level of capital investment in the office (see Figure 1-2). Management put serious money into building the information factory of the future. Personal computers were a large part of that investment. During that same period of time, office worker productivity remained virtually unchanged. How could that be?

A PAINFUL QUESTION

Personal computers are supposed to make individuals more productive; personal productivity is what computers are all about, right? Think about all the people you know who use desktop machines for various projects. Writing with a word processor is certainly better than writing with a pen or typewriter, and preparing a budget or sales forecast is infinitely easier with a spreadsheet than with a calculator. But is the user of that word processor or spreadsheet actually more productive? Does he or she get more work done in less time? Consider the lowly office memo. I used to dash off a quick note by hand or dictation machine. For short notes, I just sent the handwritten version along; for more elaborate communications, a typewritten version was prepared and distributed. Because the process was so painful, memos were kept short and simple. Similarly, budgets and forecasts, limited by the awkwardness of hand calculation, were always reduced to minimal state, too.

With personal computers, simplicity at work went out the door. In theory, you could produce the same simple typewritten memo, using a word processor, and send it out in less time. Instead, the memo grew in length, graduated to multicolumn format, and gained multiple fonts, embedded graphics, and elegant formatting. Budgets became even more sophisticated. Spreadsheets made it possible for even the most elementary business proposition to be dressed up to illustrate multiple scenarios, complex alternatives, and masses of detail. Forecasts with dozens of formulas became the rule, not the exception. So instead of doing the same work in less time, or more work in the same amount of time, so-called information workers found themselves doing more work in more time. And worst of all, work on personal computers fell into categories not directly related to bottom-line return on investment.

A PAINFUL ANSWER

Does a somewhat better justification for a project make a business more competitive? How about a slightly more elaborate sales forecast or a dressed-up progress report? The problem with most of the work done on personal computers is that much of this work is only indirectly related to the line functions that keep the business going. In effect, the personal computers acquired during the '80s packed a double whammy at the organization and the IT budget. In the first place, those computers made their users only marginally more productive. And even where PCs have led to more productivity, the gains are applied in ways that yield only indirect return on investment -- ways that don't show up on the bottom line.

Finally, while apparently not contributing to the bottom line, personal computers did spoil users. Personal computer users, now accustomed to windows-based, mouse-driven graphical applications, find mainframe applications painfully hard to learn and use. As users run those mainframe applications on the personal computer screen using terminal-emulation software, the discrepancy becomes particularly painful. The user gets to see the clunky order-entry form right there on the very same screen sitting next to the neat, attractive, powerful spreadsheet. Here is perhaps the ultimate irony: not only have personal computers not provided a return on capital investment, but worse, they make their users not want to use the other computers that do provide a return.

During the 1990s, organizations must adopt a completely new perspective on the use of computers. In the aggregate, computer costs are out of control. The mainframes and their databases run the business, and that function must be carried forward. However, you can't afford to keep paying for those mainframes while also paying for a completely parallel infrastructure centered around personal computers. The personal computers are too expensive, as well. PCs now cost as much as the mainframes, but serve personal needs only. As things stand today, senior management can't get rid of either mainframes or personal computers. The mainframes run the business, and the personal computers have come to be viewed as entitlements (and you know how hard it is to take entitlements away) by users everywhere. There's the dilemma. What we need is a breakthrough. The first impulse is to look on the technical front. However, the answer isn't there; it's on the business front. The next section tells why.

TUNING, DOWNSIZING, RIGHTSIZING, DOWNSIZING AFTER ALL

What is the best way to understand the technical and business revolution of the '90s? The tables in the following four sections frame this question by placing technology on the vertical axis and business practices on the horizontal axis.


TUNING

Faced with rapid changes in both technology and business organization, most companies start out by doing as little as possible (see Table 1-1). In other words, they tune the existing systems. This is not enough.

Table 1-1 Tuning Existing Systems

| | Same Business Model | New Business Model |
| New Technology | | |
| Same Technology | Tuning | |

DOWNSIZING

Downsizing is another approach. If mainframes and personal computers are too expensive, why not get rid of one? After all, it's common knowledge that PCs are becoming as powerful as mainframes were just a few years ago. Why not just replace all of the mainframes with a few networked personal computers? In simple terms, this is the core idea behind the computer downsizing movement of the early '90s (see Table 1-2).

Table 1-2 Downsizing Systems

| | Same Business Model | New Business Model |
| New Technology | Downsizing | |
| Same Technology | Tuning | |

The problem is that downsizing carried out in a simple-minded way just doesn't work. Mainframe applications are generally very complex and depend a great deal on the sophisticated operating systems and databases that are part of the mainframe environment. To make downsizing really work, you must find some way to convert those applications to run on personal computers that may or may not be networked. The process had better be a conversion instead of a rewrite -- because rewriting those applications will typically cost as much as any savings generated by the move. Besides, rewriting applications takes a long time, but organizations don't stand still that long. Nobody can afford to wait through an entire rewrite for potential cost savings. So to make simple downsizing work, you would need a conversion -- and a fast one at that.

Unfortunately, large, sophisticated, mainframe-based applications cannot be converted to run on personal computers. There are many technical reasons for this: dependence on databases, TP monitors, terminal orientation, and so on. Mainframe and network/PC system architectures are very different. An application originally written for one system needs to be fundamentally rewritten to run well in the other environment. All of this means that there is no simple way to eliminate the cost of both mainframe and personal computer infrastructures.

RIGHTSIZING

Many industry pundits decided that if large-scale downsizing doesn't work, some variation of downsizing must still be practical. Instead of downsizing, simply rightsize (see Table 1-3). Aside from any technical and business merits of the approach, the term rightsizing has a certain emotional appeal. Downsizing, after all, carries with it connotations of shrinking companies, smaller workforces, and large-scale layoffs. Rightsizing, on the other hand, starts out with none of this negative emotional baggage.

Table 1-3 Rightsizing Systems

| | Same Business Model | New Business Model |
| New Technology | Rightsizing | |
| Same Technology | Tuning | |

So what is rightsizing? Rightsizing is a euphemism for the phrase "personal computers are really toys." The reasoning behind the term rightsizing goes something like this:

| Everybody knows that straightforward conversion of big, mainframe-based applications isn't practical. |

| Everybody (whoever "everybody" is) knows that those big applications that run the business can't be made to run on PC systems. The fact that the real applications can't be converted is just a symptom of a larger problem: PCs don't have the performance, the operating systems, or the overall throughput to run the big, serious applications. |

| There has to be some way to gain some more return on investment from PCs. |

| PCs should be reserved for smaller applications. After all, those smaller applications just clutter up the mainframe anyway: they're a nuisance to write, a bother to keep running, their users generate pesky demands, and now there's a nice smaller home they can live in. |

In effect, rightsizing is a euphemism for moving toy applications to toy machines and leaving the real machines to run the real applications. The strategy also won't work. It won't work because having the mainframes and PCs is too expensive, and because PC systems are much more powerful than is being recognized. But most of all, a business revolution is in the works that calls for a new approach to building systems... period. The new approach does provide the justification for rewriting the applications that run the business. If converting is too complex, don't convert. If the cost of a rewrite is too frightening, just wait. Soon the reasons and financial justifications for that rewrite will present themselves.

DOWNSIZING AFTER ALL

After playing through all the variations on downsizing and rightsizing computer systems, some organizations settle on yet another choice: ignore the technology and the computer systems altogether (see Table 1-4). After all, if those computer systems are so stubbornly hard to downsize and if rightsizing doesn't yield enough benefits, maybe the solution is to ignore those pesky computers. Focus on the business. Reengineer the processes. And, somehow, when the business runs more effectively, either the computer problems will magically go away or profitability will increase so much that you can ignore the computer problems anyway. Sounds fine, but also doesn't work.

Table 1-4 Business Process Reengineering without Computer System Downsizing

| | Same Business Model | New Business Model |
| New Technology | Downsizing | |
| Same Technology | Tuning | BPR |

As you'll see in the next chapter, Business Process Reengineering (BPR) actually depends heavily on new information technology. In fact, the whole point of reengineering is to use computers to redesign the organization. A reengineered corporation provides the justification required for rewriting all those existing mainframe applications. Besides, rewriting those mainframe applications will help the organization meet the new requirements that reengineering calls for anyway. Ultimately, reengineering will yield a distributed computer system that combines the strengths of mainframes and personal computers. The new distributed system will bolster the reengineered processes, strengthen self-managed teams, and empower employees, as I discuss in the next chapter.

As the final form of the matrix shows, downsizing is driven by Business Process Reengineering (see Table 1-5). Conversion isn't possible. Mainframe applications won't run largely unchanged on PCs, networked or not. But properly rewritten, the applications required to run even very large organizations can run on networked personal computers.


Table 1-5 Business Process Reengineering WITH Computer System Downsizing

| | Same Business Model | New Business Model |
| New Technology | Downsizing | Breakthrough |
| Same Technology | Tuning | BPR |

The resulting systems will have all the performance, throughput, and sophistication required in a real business environment. True, the applications will be written in a completely new way. But once written, they exhibit flexibility, scalability, and friendliness unheard of in today's big application world. The key is realizing that technology forces will not make all this happen. Instead, new business forces will make those costs incredibly affordable. The next chapter looks at the business forces that can drive this change.