
Topics in Statistical Data Analysis


Binomial, Multinomial, Hypergeometric, Geometric, Pascal, Negative Binomial, Poisson, Normal, Gamma, Exponential, Beta, Uniform, Log-normal, Rayleigh, Cauchy, Chi-square, Weibull, Extreme value, t distributions.

Common Discrete Probability Functions

P-values for the Popular Distributions


Why Is Every Thing Priced One Penny Off the Dollar?

A Short History of Probability and Statistics

Different Schools of Thought in Statistics

Bayesian, Frequentist, and Classical Methods

Rumor, Belief, Opinion, and Fact

What is Statistical Data Analysis? Data are not Information!

Data Processing: Coding, Typing, and Editing

Type of Data and Levels of Measurement

Variance of Nonlinear Random Functions

Visualization of Statistics: Analytic-Geometry & Statistics

What Is a Geometric Mean?

What Is Central Limit Theorem?

What Is a Sampling Distribution?

Outlier Removal

Least Squares Models

Least Median of Squares Models

What Is Sufficiency?

You Must Look at Your Scattergrams!

Power of a Test

ANOVA: Analysis of Variance

Orthogonal Contrasts of Means in ANOVA

Problems with Stepwise Variable Selection

An Alternative Approach for Estimating a Regression Line

Multivariate Data Analysis

The Meaning and Interpretation of P-values (what the data say?)

What is the Effect Size?

What is the Benford's Law? What About the Zipf's Law?

Bias Reduction Techniques

Area under Standard Normal Curve

Number of Class Interval in Histogram

Structural Equation Modeling

Econometrics and Time Series Models

Tri-linear Coordinates Triangle

Internal and Inter-rater Reliability

When to Use Nonparametric Technique?

Analysis of Incomplete Data

Interactions in ANOVA and Regression Analysis

Control Charts, and the CUSUM

The Six-Sigma Quality

Repeatability and Reproducibility

Statistical Instrument, Grab Sampling, and Passive Sampling Techniques

Distance Sampling

Bayes and Empirical Bayes Methods

Markovian & Memory Theory

Likelihood Methods

Accuracy, Precision, Robustness, and Quality

Influence Function and Its Applications

What is Imprecise Probability?

What is a Meta-Analysis?

Industrial Data Modeling

Prediction Interval

Fitting Data to a Broken Line

How to determine if Two Regression Lines Are Parallel?

Constrained Regression Model

Semiparametric and Non-parametric Modeling

Moderation and Mediation

Discriminant and Classification

Index of Similarity in Classification

Generalized Linear and Logistic Models

Survival Analysis

Association Among Nominal Variables

Spearman's Correlation, and Kendall's tau Application

Repeated Measures and Longitudinal Data

Spatial Data Analysis

Boundary Line Analysis

Geostatistics Modeling

Data Mining and Knowledge Discovery

Neural Networks Applications

Information Theory

Incidence and Prevalence Rates

Software Selection

Box-Cox Power Transformation

Multiple Comparison Tests

Antedependent Modeling for Repeated Measurements

Split-half Analysis

Sequential Acceptance Sampling

Local Influence

Variogram Analysis

Credit Scoring: Consumer Credit Assessment

Components of the Interest Rates

Partial Least Squares

Growth Curve Modeling

Saturated Model & Saturated Log Likelihood

Pattern recognition and Classification

What is Biostatistics?

Evidential Statistics

Statistical Forensic Applications

What Is a Systematic Review?

What Is the Black-Scholes Model?

What Is a Classification Tree?

What Is a Regression Tree?

Cluster Analysis for Correlated Variables

Capture-Recapture Methods

Tchebysheff Inequality and Its Improvements

Frechet Bounds for Dependent Random Variables

Statistical Data Analysis in Criminal Justice

What is Intelligent Numerical Computation?

Software Engineering by Project Management

Chi-Square Analysis for Categorical Grouped Data

Cohen's Kappa: Measures of Data Consistency

Modeling Dependent Categorical Data

The Deming Paradigm

Reliability & Repairable System

Computation of Standard Scores

Quality Function Deployment (QFD)

Event History Analysis

Kinds of Lies: Lies, Damned Lies and Statistics

Factor Analysis

Entropy Measure

Warranties: Statistical Planning and Analysis

Tests for Normality

Directional (i.e., circular) Data Analysis

Introduction

Decision making under uncertainty is largely based on the application of statistical data analysis for probabilistic risk assessment of your decision. Managers need to understand variation for two key reasons: first, so that they can lead others to apply statistical thinking in day-to-day activities, and second, so that they can apply the concept for the purpose of continuous improvement. This course will provide you with hands-on experience to promote the use of statistical thinking and techniques, and to apply them to make educated decisions whenever there is variation in business data. Therefore, it is a course in statistical thinking via a data-oriented approach.

Statistical models are currently used in various fields of business and science. However, the terminology differs from field to field. For example, the fitting of models to data is variously called calibration, history matching, or data assimilation; all of these are synonymous with parameter estimation.

Your organization database contains a wealth of information, yet the decision technology group members tap a fraction of it. Employees waste time scouring multiple sources for a database. The decision-makers are frustrated because they cannot get business-critical data exactly when they need it. Therefore, too many decisions are based on guesswork, not facts. Many opportunities are also missed, if they are even noticed at all.

Knowledge is what we know well. Information is the communication of knowledge. In every knowledge exchange, there is a sender and a receiver. The sender makes common what is private; the sender does the informing, the communicating. Information can be classified into explicit and tacit forms. Explicit information can be explained in structured form, while tacit information is inconsistent and fuzzy to explain.

Data is known to be crude information and not knowledge by itself. The sequence from data to knowledge is: from Data to Information, from Information to Facts, and finally, from Facts to Knowledge. Data becomes information, when it becomes relevant to your decision problem. Information becomes fact, when the data can support it. Facts are what the data reveals. However the decisive instrumental (i.e., applied) knowledge is expressed together with some statistical degree of confidence.

Fact becomes knowledge, when it is used in the successful completion of a decision process. Once you have a massive amount of facts integrated as knowledge, then your mind will be superhuman in the same sense that mankind with writing is superhuman compared to mankind before writing. The following figure illustrates the statistical thinking process based on data in constructing statistical models for decision making under uncertainties.

The above figure depicts the fact that as the exactness of a statistical model increases, the level of improvements in decision-making increases. That's why we need statistical data analysis. Statistical data analysis arose from the need to place knowledge on a systematic evidence base. This required a study of the laws of probability, the development of measures of data properties and relationships, and so on.

Statistical inference aims at determining whether any statistical significance can be attached to results after due allowance is made for any random variation as a source of error. Intelligent and critical inferences cannot be made by those who do not understand the purpose, the conditions, and the applicability of the various techniques for judging significance.

Considering the uncertain environment, the chance that "good decisions" are made increases with the availability of "good information." The chance that "good information" is available increases with the level of structuring the process of Knowledge Management.

Knowledge is more than knowing something technical. Knowledge needs wisdom. Wisdom is the power to put our time and our knowledge to the proper use. Wisdom comes with age and experience. Wisdom is the accurate application of accurate knowledge, and its key component is knowing the limits of your knowledge. Wisdom is about knowing how something technical can be best used to meet the needs of the decision-maker. Wisdom, for example, creates statistical software that is useful, rather than technically brilliant. For example, ever since the Web entered the popular consciousness, observers have noted that it puts information at your fingertips but tends to keep wisdom out of reach.

Almost every professional needs a statistical toolkit. Statistical skills enable you to intelligently collect, analyze, and interpret data relevant to your decision-making. Statistical concepts enable us to solve problems in a diversity of contexts. Statistical thinking enables you to add substance to your decisions.

The appearance of computer software, JavaScript Applets, Statistical Demonstrations Applets, and Online Computation are the most important events in the process of teaching and learning concepts in model-based statistical decision making courses. These tools allow you to construct numerical examples to understand the concepts, and to find their significance for yourself.

We will apply the basic concepts and methods of statistics you've already learned in the previous statistics course to real-world problems. The course is tailored to meet your needs in statistical business-data analysis using widely available commercial statistical computer packages such as SAS and SPSS. By doing this, you will inevitably find yourself asking questions about the data and the method proposed, and you will have the means at your disposal to settle these questions to your own satisfaction. Accordingly, all the application problems are borrowed from business and economics. By the end of this course you'll be able to think statistically while performing any data analysis.

There are two general views of teaching/learning statistics: Greater and Lesser Statistics. Greater statistics is everything related to learning from data, from the first planning or collection, to the last presentation or report. Lesser statistics is the body of statistical methodology. This is a Greater Statistics course.

There are basically two kinds of "statistics" courses. The real kind shows you how to make sense out of data. These courses would include all the recent developments and all share a deep respect for data and truth. The imitation kind involves plugging numbers into statistics formulas. The emphasis is on doing the arithmetic correctly. These courses generally have no interest in data or truth, and the problems are generally arithmetic exercises. If a certain assumption is needed to justify a procedure, they will simply tell you to "assume the ... are normally distributed" -- no matter how unlikely that might be. It seems like you all are suffering from an overdose of the latter. This course will bring out the joy of statistics in you.

Statistics is a science assisting you to make decisions under uncertainties (based on some numerical and measurable scales). The decision making process must be based on data, not on personal opinion or belief.

It is already an accepted fact that "Statistical thinking will one day be as necessary for efficient citizenship as the ability to read and write." So, let us be ahead of our time.

Popular Distributions and Their Typical Applications

Binomial

Application: Gives probability of exactly x successes in n independent trials, when probability of success p on single trial is a constant. Used frequently in quality control, reliability, survey sampling, and other industrial problems.

Example: What is the probability of 7 or more "heads" in 10 tosses of a fair coin?

Comments: Can sometimes be approximated by normal or by Poisson distribution.
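The coin-tossing example above can be worked out directly from the binomial probability function. The following is a minimal sketch; the helper names `binomial_pmf` and `binomial_tail` are illustrative and not part of the original notes.

```python
from math import comb

def binomial_pmf(x, n, p):
    """Probability of exactly x successes in n independent trials."""
    return comb(n, x) * p**x * (1 - p)**(n - x)

def binomial_tail(x_min, n, p):
    """Probability of x_min or more successes in n trials."""
    return sum(binomial_pmf(x, n, p) for x in range(x_min, n + 1))

# 7 or more heads in 10 tosses of a fair coin: 176/1024.
print(binomial_tail(7, 10, 0.5))  # 0.171875
```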

Multinomial

Application: Gives probability of exactly n_i outcomes of event i, for i = 1, 2, ..., k, in n independent trials when the probability p_i of event i in a single trial is a constant. Used frequently in quality control and other industrial problems.

Example: Four companies are bidding for each of three contracts, with specified success probabilities. What is the probability that a single company will receive all the orders?

Comments: Generalization of binomial distribution for more than 2 outcomes.
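As a rough illustration of the bidding example, the sketch below evaluates the multinomial probability function for each "one company wins all three contracts" outcome and adds them up. The success probabilities 0.4, 0.3, 0.2, and 0.1 are assumed here purely for illustration (the example leaves them unspecified), and `multinomial_pmf` is a hypothetical helper name.

```python
from math import factorial, prod

def multinomial_pmf(counts, probs):
    """Probability of observing counts[i] outcomes of event i."""
    coef = factorial(sum(counts))
    for c in counts:
        coef //= factorial(c)
    return coef * prod(p**c for p, c in zip(probs, counts))

# Assumed (illustrative) per-contract success probabilities for 4 companies.
probs = [0.4, 0.3, 0.2, 0.1]

# One company takes all 3 contracts: counts (3,0,0,0), (0,3,0,0), ...
sweep = 0.0
for winner in range(len(probs)):
    counts = [3 if i == winner else 0 for i in range(len(probs))]
    sweep += multinomial_pmf(counts, probs)

print(round(sweep, 6))  # sum of the cubes of the assumed probabilities
```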

Hypergeometric

Application: Gives probability of picking exactly x good units in a sample of n units from a population of N units when there are k bad units in the population. Used in quality control and related applications.

Example: Given a lot with 21 good units and four defective, what is the probability that a sample of five will yield not more than one defective?

Comments: May be approximated by binomial distribution when n is small relative to N.
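The lot-sampling example can be computed by counting combinations. This is a minimal sketch; the function name `hypergeom_pmf` and its argument order are illustrative choices.

```python
from math import comb

def hypergeom_pmf(defectives, good, bad, n):
    """Probability of exactly `defectives` bad units in a sample of n
    drawn without replacement from a lot of `good` + `bad` units."""
    return comb(bad, defectives) * comb(good, n - defectives) / comb(good + bad, n)

# Lot of 21 good and 4 defective units, sample of 5:
# probability of not more than one defective (about 0.8336).
p_at_most_one = sum(hypergeom_pmf(d, 21, 4, 5) for d in (0, 1))
print(round(p_at_most_one, 4))
```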

Geometric

Application: Gives probability of requiring exactly x binomial trials before the first success is achieved. Used in quality control, reliability, and other industrial situations.

Example: Determination of probability of requiring exactly five test firings before first success is achieved.
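A small sketch of the test-firing example follows. It assumes the reading in which the first success occurs on the fifth firing (four failures, then a success), and it assumes a single-firing success probability of 0.3, which is not given in the example.

```python
def geometric_pmf(x, p):
    """Probability that the first success occurs on trial x (x = 1, 2, ...)."""
    return (1 - p) ** (x - 1) * p

p_success = 0.3  # assumed value, for illustration only
print(round(geometric_pmf(5, p_success), 5))  # about 0.072
```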

Pascal

Application: Gives probability of exactly x failures preceding the sth success.

Example: What is the probability that the third success takes place on the 10th trial?
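For the example above, the third success on the 10th trial means x = 7 failures precede the s = 3rd success. The sketch below assumes a success probability of 0.5 per trial, which the example does not specify; `pascal_pmf` is an illustrative name.

```python
from math import comb

def pascal_pmf(failures, s, p):
    """Probability of exactly `failures` failures before the s-th success."""
    return comb(failures + s - 1, s - 1) * p**s * (1 - p) ** failures

# Third success on the 10th trial: 7 failures, s = 3, assumed p = 0.5.
print(pascal_pmf(7, 3, 0.5))  # 0.03515625
```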

Negative Binomial

Application: Gives probability similar to Poisson distribution when events do not occur at a constant rate and occurrence rate is a random variable that follows a gamma distribution.

Example: Distribution of number of cavities for a group of dental patients.

Comments: Generalization of Pascal distribution when s is not an integer. Many authors do not distinguish between Pascal and negative binomial distributions.

Poisson

Application: Gives probability of exactly x independent occurrences during a given period of time if events take place independently and at a constant rate. May also represent number of occurrences over constant areas or volumes. Used frequently in quality control, reliability, queuing theory, and so on.

Example: Used to represent distribution of number of defects in a piece of material, customer arrivals, insurance claims, incoming telephone calls, alpha particles emitted, and so on.

Comments: Frequently used as approximation to binomial distribution.
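To see how the Poisson distribution approximates the binomial, one can compare the two probability functions when n is large and p is small, with the Poisson mean set to n times p. The values n = 1000 and p = 0.002 below are assumed for illustration.

```python
from math import comb, exp, factorial

def binomial_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def poisson_pmf(x, lam):
    return exp(-lam) * lam**x / factorial(x)

n, p = 1000, 0.002          # assumed illustrative values
lam = n * p                 # matching Poisson mean

# Print the two probabilities side by side for small counts.
for x in range(6):
    print(x, round(binomial_pmf(x, n, p), 5), round(poisson_pmf(x, lam), 5))

# Largest pointwise gap over the counts that carry almost all the mass.
max_gap = max(abs(binomial_pmf(x, n, p) - poisson_pmf(x, lam)) for x in range(20))
```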

Normal

Application: A basic distribution of statistics. Many applications arise from central limit theorem (average of values of n observations approaches normal distribution, irrespective of form of original distribution under quite general conditions). Consequently, appropriate model for many, but not all, physical phenomena.

Example: Distribution of physical measurements on living organisms, intelligence test scores, product dimensions, average temperatures, and so on.

Comments: Many methods of statistical analysis presume normal distribution.
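The central limit theorem remark above can be checked with a small simulation. The sketch below averages n = 30 draws from a skewed exponential distribution, standardizes the averages, and checks that roughly 68% fall within one standard error of the mean, as a normal distribution would predict. The sample sizes and the seed are arbitrary choices.

```python
import math
import random
import statistics

def standardized_means(n, reps, seed=0):
    """Standardized averages of n Exponential(1) draws, repeated reps times."""
    rng = random.Random(seed)
    se = 1.0 / math.sqrt(n)  # Exponential(1) has mean 1 and std. deviation 1
    return [
        (statistics.fmean(rng.expovariate(1.0) for _ in range(n)) - 1.0) / se
        for _ in range(reps)
    ]

def fraction_within_one(z_values):
    """Share of standardized values lying in [-1, 1]."""
    return sum(abs(z) <= 1.0 for z in z_values) / len(z_values)

z = standardized_means(n=30, reps=5000, seed=1)
print(round(fraction_within_one(z), 3))  # a normal variable gives about 0.683
```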

A so-called Generalized Gaussian distribution has the following pdf:

$f(x) = A \exp(-B|x|^n)$, where A, B, and n are constants. For n = 1 and n = 2 it is the Laplacian and the Gaussian distribution, respectively. This distribution approximates the data reasonably well in some image coding applications.

Slash distribution is the distribution of the ratio of a normal random variable to an independent uniform random variable, see Hutchinson T., Continuous Bivariate Distributions, Rumsby Sci. Publications, 1990.

Gamma

Application: A basic distribution of statistics for variables bounded at one side - for example x greater than or equal to zero. Gives distribution of time required for exactly k independent events to occur, assuming events take place at a constant rate. Used frequently in queuing theory, reliability, and other industrial applications.

Example: Distribution of time between recalibrations of an instrument that needs recalibration after k uses; time between inventory restocking; time to failure for a system with standby components.

Comments: Erlangian, exponential, and chi-square distributions are special cases. The Dirichlet is a multidimensional extension of the Beta distribution.

Distribution of a product of iid uniform (0, 1) random variables? Like many problems with products, this becomes a familiar problem when turned into a problem about sums. If X is uniform (for simplicity of notation, make it U(0,1)), then Y = -log(X) is exponentially distributed, so the negative log of the product of X1, X2, ..., Xn is the sum of Y1, Y2, ..., Yn, which has a gamma (scaled chi-square) distribution. Thus, the negative log of the product has a gamma density with shape parameter n and scale 1.
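The argument above can be checked by simulation: a gamma distribution with shape n and scale 1 has mean n and variance n, so the negative log of a product of n uniforms should show both. This is a sketch with arbitrary sample size and seed.

```python
import math
import random
import statistics

def neg_log_product_samples(n, samples, seed=0):
    """Draws of -log(X1 * X2 * ... * Xn) for iid uniform Xi."""
    rng = random.Random(seed)
    # 1 - random() lies in (0, 1], which avoids log(0).
    return [
        -sum(math.log(1.0 - rng.random()) for _ in range(n))
        for _ in range(samples)
    ]

draws = neg_log_product_samples(n=3, samples=20000, seed=1)
print(round(statistics.fmean(draws), 2), round(statistics.pvariance(draws), 2))
# Both values should be close to n = 3.
```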

Exponential

Application: Gives distribution of time between independent events occurring at a constant rate. Equivalently, probability distribution of life, presuming constant conditional failure (or hazard) rate. Consequently, applicable in many, but not all reliability situations.

Example: Distribution of time between arrival of particles at a counter. Also life distribution of complex nonredundant systems, and usage life of some components - in particular, when these are exposed to initial burn-in, and preventive maintenance eliminates parts before wear-out.

Comments: Special case of both Weibull and gamma distributions.

Beta

Application: A basic distribution of statistics for variables bounded at both sides - for example x between 0 and 1. Useful for both theoretical and applied problems in many areas.

Example: Distribution of proportion of population located between lowest and highest value in sample; distribution of daily per cent yield in a manufacturing process; description of elapsed times to task completion (PERT).

Comments: Uniform, right triangular, and parabolic distributions are special cases. To generate beta, generate two random values from a gamma, g1, g2. The ratio g1/(g1 +g2) is distributed like a beta distribution. The beta distribution can also be thought of as the distribution of X1 given (X1+X2), when X1 and X2 are independent gamma random variables.
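The gamma-ratio recipe in the comments can be sketched with the standard library. The sketch assumes the two gamma values are drawn independently with a common scale (1 here) and with shapes a and b, in which case the ratio follows a beta distribution with parameters a and b and mean a/(a + b). The shapes 2 and 5 are arbitrary.

```python
import random
import statistics

def beta_from_gammas(a, b, rng):
    """One beta(a, b) draw built from two independent gamma draws."""
    g1 = rng.gammavariate(a, 1.0)
    g2 = rng.gammavariate(b, 1.0)
    return g1 / (g1 + g2)

rng = random.Random(1)
a, b = 2.0, 5.0  # assumed shape parameters, for illustration
draws = [beta_from_gammas(a, b, rng) for _ in range(20000)]
print(round(statistics.fmean(draws), 3), round(a / (a + b), 3))
```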

There is also a relationship between the Beta and Normal distributions. The conventional calculation is that given a PERT Beta with highest value as b, lowest as a, and most likely as m, the equivalent normal distribution has a mean and mode of (a + 4m + b)/6 and a standard deviation of (b - a)/6.
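The conventional PERT calculation is simple arithmetic; a minimal sketch follows. The task durations used (lowest 2, most likely 5, highest 14) are made up for illustration.

```python
def pert_normal_approx(a, m, b):
    """Mean and standard deviation of the normal approximation to a
    PERT beta with lowest value a, most likely m, and highest value b."""
    mean = (a + 4 * m + b) / 6
    std_dev = (b - a) / 6
    return mean, std_dev

print(pert_normal_approx(2, 5, 14))  # (6.0, 2.0)
```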

See Section 4.2 of Introduction to Probability by J. Laurie Snell (New York, Random House, 1987) for a link between beta and F distributions (with the advantage that tables are easy to find).

Uniform

Application: Gives probability that observation will occur within a particular interval when probability of occurrence within that interval is directly proportional to interval length.

Example: Used to generate random values.

Comments: Special case of beta distribution.

The density of the geometric mean of n independent uniform (0,1) random variables is:

$f(x) = \dfrac{n\,x^{n-1}\,[\log(1/x^{n})]^{n-1}}{(n-1)!}$, for 0 < x < 1.

$z_\lambda = [U^\lambda - (1-U)^\lambda]/\lambda$, where U is uniform on (0,1), is said to have Tukey's symmetrical lambda distribution.

Log-normal

Application: Permits representation of random variable whose logarithm follows normal distribution. Model for a process arising from many small multiplicative errors. Appropriate when the value of an observed variable is a random proportion of the previously observed value.

In the case where the data are lognormally distributed, the geometric mean acts as a better data descriptor than the mean. The more closely the data follow a lognormal distribution, the closer the geometric mean is to the median, since the log re-expression produces a symmetrical distribution.

Example: Distribution of sizes from a breakage process; distribution of income size, inheritances and bank deposits; distribution of various biological phenomena; life distribution of some transistor types.

The ratio of two log-normally distributed variables is log-normal.

Rayleigh

Application: Gives distribution of radial error when the errors in two mutually perpendicular axes are independent and normally distributed around zero with equal variances.

Example: Bomb-sighting problems; amplitude of noise envelope when a linear detector is used.

Comments: Special case of Weibull distribution.
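The radial-error description can be illustrated by simulation. The sketch below draws independent normal errors on two perpendicular axes with a common standard deviation sigma and compares the average radial error with the Rayleigh mean, sigma times the square root of pi/2. The value sigma = 2, the sample size, and the seed are arbitrary.

```python
import math
import random
import statistics

def radial_errors(sigma, samples, seed=0):
    """Radial distance from the origin for independent N(0, sigma^2)
    errors along two perpendicular axes."""
    rng = random.Random(seed)
    return [
        math.hypot(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma))
        for _ in range(samples)
    ]

sigma = 2.0
r = radial_errors(sigma, samples=20000, seed=1)
print(round(statistics.fmean(r), 3), round(sigma * math.sqrt(math.pi / 2), 3))
```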

Cauchy

Application: Gives distribution of ratio of two independent standardized normal variates.

Example: Distribution of ratio of standardized noise readings; distribution of tan(x) when x is uniformly distributed.
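A quick simulation sketch of the ratio construction: the standard Cauchy distribution has quartiles at -1 and +1, so about half of the ratios of two independent standard normal draws should fall between -1 and 1. The sample size and seed are arbitrary.

```python
import random

def normal_ratio_samples(samples, seed=0):
    """Ratios of two independent standard normal draws."""
    rng = random.Random(seed)
    ratios = []
    for _ in range(samples):
        numerator = rng.gauss(0.0, 1.0)
        denominator = rng.gauss(0.0, 1.0)
        while denominator == 0.0:  # practically never happens; avoids 1/0
            denominator = rng.gauss(0.0, 1.0)
        ratios.append(numerator / denominator)
    return ratios

ratios = normal_ratio_samples(20000, seed=1)
share_inside = sum(abs(v) <= 1.0 for v in ratios) / len(ratios)
print(round(share_inside, 3))  # should be near 0.5
```

Because the Cauchy distribution has no finite mean, the sample average of these ratios does not settle down as the sample grows; quantiles such as the ones used here are the safer summary.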

Chi-square

The probability density curve of a chi-square distribution is an asymmetric curve stretching over the positive side of the line and having a long right tail. The form of the curve depends on the value of the degrees of freedom.

Applications: The most widely used applications of the chi-square distribution are:

- Chi-square Test for Association is a test of statistical significance (non-parametric, and therefore usable for nominal data) widely used in bivariate tabular association analysis. Typically, the hypothesis is whether or not two different populations are different enough in some characteristic or aspect of their behavior based on two random samples. This test procedure is also known as the Pearson chi-square test.

- Chi-square Goodness-of-fit Test is used to test if an observed distribution conforms to any particular distribution. Calculation of this goodness of fit test is by comparison of observed data with data expected based on the particular distribution.
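The Pearson statistic used in the test for association compares each observed cell count with the count expected under independence (row total times column total, divided by the grand total). The sketch below computes the statistic and its degrees of freedom for a small table of made-up counts; it stops short of the p-value, which would be read from a chi-square table or computed with statistical software.

```python
def chi_square_statistic(table):
    """Pearson chi-square statistic and degrees of freedom for an
    r x c contingency table given as a list of rows of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return stat, df

# Hypothetical counts: two samples (rows) by two categories (columns).
observed = [[10, 20], [30, 40]]
stat, df = chi_square_statistic(observed)
print(round(stat, 4), df)
```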

Weibull

Application: General time-to-failure distribution due to wide diversity of hazard-rate curves, and extreme-value distribution for minimum of N values from distribution bounded at left.

The Weibull distribution is often used to model "time until failure." In this manner, it is applied in actuarial science and in engineering work.

It is also an appropriate distribution for describing data corresponding to resonance behavior, such as the variation with energy of the cross section of a nuclear reaction or the variation with velocity of the absorption of radiation in the Mossbauer effect.

Example: Life distribution for some capacitors, ball bearings, relays, and so on.

Comments: Rayleigh and exponential distribution are special cases.

Extreme value

Application: Limiting model for the distribution of the maximum or minimum of N values selected from an "exponential-type" distribution, such as the normal, gamma, or exponential.

Example: Distribution of breaking strength of some materials, capacitor breakdown voltage, gust velocities encountered by airplanes, bacteria extinction times.

t distributions

The t distributions were discovered in 1908 by William Gosset, who was a chemist and a statistician employed by the Guinness brewing company. He considered himself a student still learning statistics, so he signed his papers with the pseudonym "Student". Or perhaps he used a pseudonym due to "trade secrets" restrictions by Guinness.

Note that there are different t distributions; it is a class of distributions. When we speak of a specific t distribution, we have to specify the degrees of freedom. The t density curves are symmetric and bell-shaped like the normal distribution and have their peak at 0. However, the spread is more than that of the standard normal distribution. The larger the degrees of freedom, the closer the t-density is to the normal density.
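The comparison with the normal density can be made concrete by evaluating both densities at the peak (x = 0) and in the tail (x = 3) for several degrees of freedom. This is a sketch using the standard closed form of the t density; the chosen degrees of freedom are arbitrary.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

def normal_pdf(x):
    """Density of the standard normal distribution."""
    return math.exp(-0.5 * x * x) / math.sqrt(2 * math.pi)

# Lower peak and heavier tails for small df; both converge to the normal.
for df in (1, 5, 30, 1000):
    print(df, round(t_pdf(0.0, df), 4), round(t_pdf(3.0, df), 4))
print("normal", round(normal_pdf(0.0), 4), round(normal_pdf(3.0), 4))
```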

Why Is Every Thing Priced One Penny Off the Dollar?

Here's a psychological answer. Due to a very limited data processing ability, we humans rely heavily on categorization (e.g., seeing things as "black or white" requires just a binary coding scheme, as opposed to seeing the many shades of gray). Our number system has a major category of 100's (e.g., 100 pennies, 200 pennies, 300 pennies) and there is an affective response associated with these groups: more is better if you are getting them; more is bad if you are giving them. Advertising and pricing take advantage of this limited data processing with prices such as $2.99, $3.95, etc., so that $2.99 carries the affective response associated with the 200 pennies group. Indeed, if you ask people to respond to "how close together" are 271 & 283 versus "how close together" are 291 & 303, the former are seen as closer (there's a lot of methodology set up to dissuade the subjects from just subtracting the smaller from the larger). Similarly, prejudice, job promotions, competitive sports, and a host of other activities attempt to associate large qualitative differences with what are often minor quantitative differences, e.g., a gold medal in an Olympic swimming event may be milliseconds away from no medal.

Yet another motivation: Psychologically $9.99 might look better than $10.00, but there is a more basic reason too. The assistant has to give you change from your ten dollars, and has to ring the sale up through his/her cash register to get at the one cent. This forces the transaction to go through the books, you get a receipt, and the assistant can't just pocket the $10 him/herself. Mind you, there's nothing to stop a particularly untrustworthy employee going into work with a pocketful of cents...

There's sales tax for that. For either price (at least in the US), you'll have to pay sales tax too. So that solves the problem of opening the cash register. That, plus the security cameras ;).

There has been some research in marketing theory on the consumer's behavior at particular price points. Essentially, these are tied up with buyer expectations based on prior experience. A critical case study in the UK on price pointing of pantyhose (tights) showed that there were distinct demand peaks at buyer-anticipated price points of 59p, 79p, 99p, £1.29 and so on. Demand at intermediate price points was dramatically below these anticipated points for similar quality goods. In the UK, for example, prices of wine are usually set at key price points. The wine retailers also confirm that different prices (even a penny or so different) do result in dramatically different sales volumes.

Other studies showed the opposite where reduced price showed reduced sales volumes, consumers ascribing quality in line with price. However, it is not fully tested to determine if sales volume continued to increase with price.

Other similar research turns on the behavior of consumers to variations in price. The key issue here is that there is a Just Noticeable Difference (JND) below which consumers will not act on a price increase. This has practical application when increasing charge rates and the like. The JND is typically 5% and this provides the opportunity for consultants etc to increase prices above prior rates by less than the JND without customer complaint. As an empirical experiment, try overcharging clients by 1, 2,.., 5, 6% and watch the reaction. Up to 5% there appears to be no negative impact.

Conversely, there is no point in offering a fee reduction of less than 5% as clients will not recognize the concession you have made. Equally, in periods of price inflation, price rises should be staged so that the individual price rise is kept under 5%, perhaps by raising prices by 4% twice per year rather than a one off 8% rise.

A Short History of Probability and Statistics

The original idea of "statistics" was the collection of information about and for the "state". The word statistics derives directly not from any classical Greek or Latin roots, but from the Italian word for state.

The birth of statistics occurred in the mid-17th century. A commoner named John Graunt, who was a native of London, began reviewing a weekly church publication issued by the local parish clerk that listed the number of births, christenings, and deaths in each parish. These so-called Bills of Mortality also listed the causes of death. Graunt, who was a shopkeeper, organized this data in the forms we call descriptive statistics, which was published as Natural and Political Observations Made upon the Bills of Mortality. Shortly thereafter, he was elected as a member of the Royal Society. Thus, statistics has to borrow some concepts from sociology, such as the concept of "Population". It has been argued that since statistics usually involves the study of human behavior, it cannot claim the precision of the physical sciences.

Probability has a much longer history. Probability is derived from the verb to probe, meaning to "find out" what is not too easily accessible or understandable. The word "proof" has the same origin; a proof provides the necessary details to understand what is claimed to be true.

Probability originated from the study of games of chance and gambling during the sixteenth century. Probability theory was a branch of mathematics studied by Blaise Pascal and Pierre de Fermat in the seventeenth century. Currently, in the 21st century, probabilistic modeling is used to control the flow of traffic through a highway system, a telephone interchange, or a computer processor; to find the genetic makeup of individuals or populations; and in quality control, insurance, investment, and other sectors of business and industry.

New and ever growing diverse fields of human activities are using statistics; however, it seems that this field itself remains obscure to the public. Professor Bradley Efron expressed this fact nicely:

Further Readings:

Daston L., Classical Probability in the Enlightenment, Princeton University Press, 1988.

The book points out that early Enlightenment thinkers could not face uncertainty. A mechanistic, deterministic machine, was the Enlightenment view of the world.

Gillies D., Philosophical Theories of Probability, Routledge, 2000. Covers the classical, logical, subjective, frequency, and propensity views.

Hacking I., The Emergence of Probability, Cambridge University Press, London, 1975. A philosophical study of early ideas about probability, induction and statistical inference.

Peters W., Counting for Something: Statistical Principles and Personalities, Springer, New York, 1987. It teaches the principles of applied economic and social statistics in a historical context. Featured topics include public opinion polls, industrial quality control, factor analysis, Bayesian methods, program evaluation, non-parametric and robust methods, and exploratory data analysis.

Porter T., The Rise of Statistical Thinking, 1820-1900, Princeton University Press, 1986.

The author states that statistics has become known in the twentieth century as the mathematical tool for analyzing experimental and observational data. Enshrined by public policy as the only reliable basis for judgments as to the efficacy of medical procedures or the safety of chemicals, and adopted by business for such uses as industrial quality control, it is evidently among the products of science whose influence on public and private life has been most pervasive. Statistical analysis has also come to be seen in many scientific disciplines as indispensable for drawing reliable conclusions from empirical results. This new field of mathematics found so extensive a domain of applications.

Stigler S., The History of Statistics: The Measurement of Uncertainty Before 1900, U. of Chicago Press, 1990. It covers the people, ideas, and events underlying the birth and development of early statistics.

Tankard J., The Statistical Pioneers, Schenkman Books, New York, 1984.

This work provides the detailed lives and times of theorists whose work continues to shape much of the modern statistics.

Different Schools of Thought in Statistics

There are a few different schools of thought in statistics. They were introduced sequentially in time, by necessity.

The Birth Process of a New School of Thought

The process of devising a new school of thought in any field has always taken a natural path. Birth of new schools of thought in statistics is not an exception. The birth process is outlined below:

Given an already established school, one must work within the defined framework.

A crisis appears, i.e., some inconsistencies in the framework result from its own laws.

Response behavior:

- Reluctance to consider the crisis.

- Try to accommodate and explain the crisis within the existing framework.

- Conversion of some well-known scientists attracts followers in the new school.

The perception of a crisis in the statistical community calls forth demands for "foundation strengthening". After the crisis is over, things may look different and historians of statistics may cast the event as one in a series of steps in "building upon a foundation". So we can read histories of statistics as the story of a pyramid built up layer by layer on a firm base over time.

Other schools of thought are emerging to extend and "soften" the existing theory of probability and statistics. Some "softening" approaches utilize the concepts and techniques developed in the fuzzy set theory, the theory of possibility, and Dempster-Shafer theory.

The following Figure illustrates the three major schools of thought; namely, the Classical (attributed to Laplace), Relative Frequency (attributed to Fisher), and Bayesian (attributed to Savage). The arrows in this figure represent some of the main criticisms among Objective, Frequentist, and Subjective schools of thought. To which school do you belong? Read the conclusion in this figure.

What Type of Statistician Are You?


Further Readings:

von Plato J., Creating Modern Probability, Cambridge University Press, 1994. This book provides a historical point of view on the subjectivist and objectivist schools of thought in probability.

Press S., and J. Tanur, The Subjectivity of Scientists and the Bayesian Approach, Wiley, 2001. Comparing and contrasting the reality of subjectivity in the work of history's great scientists and the modern Bayesian approach to statistical analysis.

Weatherson B., Begging the question and Bayesians, Studies in History and Philosophy of Science, 30(4), 687-697, 1999.

Bayesian, Frequentist, and Classical Methods

The problem with the Classical Approach is that what constitutes an outcome is not objectively determined. One person's simple event is another person's compound event. One researcher may ask, of a newly discovered planet, "what is the probability that life exists on the new planet?" while another may ask "what is the probability that carbon-based life exists on it?"

Bruno de Finetti, in the introduction to his two-volume treatise on Bayesian ideas, clearly states that "Probabilities Do not Exist". By this he means that probabilities are not located in coins or dice; they are not characteristics of things like mass, density, etc.

Some Bayesian approaches consider probability theory as an extension of deductive logic (including dialogue logic, interrogative logic, informal logic, and artificial intelligence) to handle uncertainty. It purports to deduce from first principles the uniquely correct way of representing your beliefs about the state of things, and updating them in the light of the evidence. The laws of probability have the same status as the laws of logic. These Bayesian approaches are explicitly "subjective" in the sense that they deal with the plausibility which a rational agent ought to attach to the propositions he/she considers, "given his/her current state of knowledge and experience." By contrast, at least some non-Bayesian approaches consider probabilities as "objective" attributes of things (or situations) which are really out there (availability of data).

A Bayesian and a classical statistician analyzing the same data will generally reach the same conclusion. However, the Bayesian is better able to quantify the true uncertainty in his analysis, particularly when substantial prior information is available. Bayesians are willing to assign probability distribution function(s) to the population's parameter(s) while frequentists are not.

From a scientist's perspective, there are good grounds to reject Bayesian reasoning. The problem is that Bayesian reasoning deals not with objective, but subjective probabilities. The result is that any reasoning using a Bayesian approach cannot be publicly checked -- something that makes it, in effect, worthless to science, like non replicative experiments.

Bayesian perspectives often shed a helpful light on classical procedures. It is necessary to go into a Bayesian framework to give confidence intervals the probabilistic interpretation which practitioners often want to place on them. This insight is helpful in drawing attention to the point that another prior distribution would lead to a different interval.
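The dependence of the interval on the prior can be illustrated with a small sketch. It assumes a binomial proportion with a conjugate Beta prior; the data (12 successes in 40 trials), the two priors, and the function name `credible_interval` are hypothetical choices made only for illustration, and the interval is approximated by sampling from the posterior rather than computed exactly.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 12 successes in 40 Bernoulli trials.
successes, trials = 12, 40

def credible_interval(prior_a, prior_b, level=0.95, draws=200_000):
    """Equal-tailed credible interval for a proportion under a Beta prior.

    With a Beta(prior_a, prior_b) prior and binomial data, the posterior is
    Beta(prior_a + successes, prior_b + failures); we sample from it and
    read off the empirical quantiles.
    """
    failures = trials - successes
    posterior = rng.beta(prior_a + successes, prior_b + failures, size=draws)
    tail = (1.0 - level) / 2.0
    return np.quantile(posterior, [tail, 1.0 - tail])

flat = credible_interval(1, 1)          # uniform prior on the proportion
sceptical = credible_interval(20, 20)   # prior concentrated near 0.5

print("95% interval, uniform prior:     ", np.round(flat, 3))
print("95% interval, Beta(20, 20) prior:", np.round(sceptical, 3))
```

Both intervals carry the direct probabilistic reading "the parameter lies in this range with probability 0.95, given the data and the prior", and the second one is pulled toward 0.5 by its prior.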

A Bayesian may cheat by basing the prior distribution on the data; a Frequentist can base the hypothesis to be tested on the data. For example, the role of a protocol in clinical trials is to prevent this from happening by requiring the hypothesis to be specified before the data are collected. In the same way, a Bayesian could be obliged to specify the prior in a public protocol before beginning a study. In a collective scientific study, this would be somewhat more complex than for Frequentist hypotheses because priors must be personal for coherence to hold.

A suitable quantity that has been proposed to measure inferential uncertainty, i.e., to handle the a priori unexpected, is the likelihood function itself.

If you perform a series of identical random experiments (e.g., coin tosses), the underlying probability distribution that maximizes the probability of the outcome you observed is the one whose probabilities equal the observed relative frequencies of the results.

This has the direct interpretation of telling how (relatively) well each possible explanation (model), whether obtained from the data or not, predicts the observed data. If the data happen to be extreme ("atypical") in some way, so that the likelihood points to a poor set of models, this will soon be picked up in the next rounds of scientific investigation by the scientific community. No long run frequency guarantee nor personal opinions are required.
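For the coin-toss case, the likelihood comparison described above can be sketched as follows. The data (7 heads in 10 tosses) and the grid of candidate head-probabilities are hypothetical; the point is only that the likelihood ranks every candidate model by how well it predicts the observed data, and that the maximum falls at the observed relative frequency.

```python
import math

def binomial_log_likelihood(p, heads, tosses):
    """Log-likelihood of head-probability p given the observed counts."""
    if p <= 0.0 or p >= 1.0:
        return float("-inf")
    tails = tosses - heads
    return heads * math.log(p) + tails * math.log(1.0 - p)

# Hypothetical data: 7 heads in 10 tosses.
heads, tosses = 7, 10

# Candidate models p = 0.01, 0.02, ..., 0.99.
grid = [i / 100 for i in range(1, 100)]
log_lik = [binomial_log_likelihood(p, heads, tosses) for p in grid]
best_p = grid[log_lik.index(max(log_lik))]

# Likelihood of each candidate relative to the best-supported one.
relative = {p: math.exp(ll - max(log_lik)) for p, ll in zip(grid, log_lik)}

print("best-supported head probability:", best_p)
print("relative likelihood of a fair coin:", round(relative[0.5], 3))
```

A relative likelihood well below 1 for a candidate model means the data support it much less than the best model; no prior and no long-run frequency argument enters the calculation.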

There is a sense in which the Bayesian approach is oriented toward making decisions and the frequentist hypothesis testing approach is oriented toward science. For example, there may not be enough evidence to show scientifically that agent X is harmful to human beings, but one may be justified in deciding to avoid it in one's diet.

In almost all cases, a point estimate is a continuous random variable. Therefore, the probability that the quantity being estimated (here, a probability) equals any specific point estimate is zero. This means that in a vacuum of information, we can make no guess about the probability. Even if we have information, we can really only guess at a range for the probability.

Therefore, in estimating a parameter of a given population, it is necessary that a point estimate be accompanied by some measure of the possible error of the estimate. The widely accepted approach is that a point estimate must be accompanied by some interval about the estimate with some measure of assurance that this interval contains the true value of the population parameter. For example, the reliability assurance processes in manufacturing industries are based on data driven information for making product-design decisions.
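A minimal sketch of a point estimate reported together with an interval is given below. It assumes a large-sample normal approximation for the mean (for small samples a Student t quantile would give a wider interval); the simulated measurements and the function name `mean_confidence_interval` are hypothetical.

```python
import math
import random
import statistics

def mean_confidence_interval(sample, level=0.95):
    """Point estimate and normal-approximation confidence interval for a mean."""
    n = len(sample)
    mean = statistics.fmean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)
    # Standard normal quantile, e.g. about 1.96 for a 95% level.
    z = statistics.NormalDist().inv_cdf(0.5 + level / 2.0)
    return mean, (mean - z * std_err, mean + z * std_err)

# Hypothetical measurements: 50 draws around 50 with standard deviation 5.
generator = random.Random(1)
sample = [generator.gauss(50.0, 5.0) for _ in range(50)]

estimate, (low, high) = mean_confidence_interval(sample)
print(f"point estimate {estimate:.2f}, 95% interval ({low:.2f}, {high:.2f})")
```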

Objective Bayesian: There is a clear connection between probability and logic: both appear to tell us how we should reason. But how, exactly, are the two concepts related? Objective Bayesianism offers one answer to this question. According to objective Bayesians, probability generalizes deductive logic: deductive logic tells us which conclusions are certain, given a set of premises, while probability tells us the extent to which one should believe a conclusion, given the premises, with certain conclusions being awarded full degree of belief. According to objective Bayesians, the premises objectively (i.e. uniquely) determine the degree to which one should believe a conclusion.

Further Readings:

Bernardo J., and A. Smith, Bayesian Theory, Wiley, 2000.

Congdon P., Bayesian Statistical Modelling, Wiley, 2001.

Corfield D., and J. Williamson, Foundations of Bayesianism, Kluwer Academic Publishers, 2001. Contains Logic, Mathematics, Decision Theory, and Criticisms of Bayesianism.

Land F., Operational Subjective Statistical Methods, Wiley, 1996. Presents a systematic treatment of subjectivist methods along with a good discussion of the historical and philosophical backgrounds of the major approaches to probability and statistics.

Press S., Subjective and Objective Bayesian Statistics: Principles, Models, and Applications, Wiley, 2002.

Zimmerman H., Fuzzy Set Theory, Kluwer Academic Publishers, 1991. Fuzzy logic approaches to probability (based on L.A. Zadeh and his followers) present a difference between "possibility theory" and probability theory.

Rumor, Belief, Opinion, and Fact

Out of necessity, human rational strategic thinking has evolved to cope with the environment. The rational strategic thinking that we call reasoning is another means of making the world calculable, predictable, and more manageable for utilitarian purposes. In constructing a model of reality, factual information is therefore needed to initiate any rational strategic thinking in the form of reasoning. However, we should not confuse facts with beliefs, opinions, or rumors. The following table helps to clarify the distinctions:

| | Rumor | Belief | Opinion | Fact |

| One says to oneself | I need to use it anyway | This is the truth. I'm right | This is my view | This is a fact |

| One says to others | It could be true. You know! | You're wrong | That is yours | I can explain it to you |

Beliefs are defined as someone's own understanding. In belief, "I am" always right and "you" are wrong. There is nothing that can be done to convince the person that what they believe is wrong.

With respect to belief, Henri Poincaré said, "Doubt everything or believe everything: these are two equally convenient strategies. With either, we dispense with the need to think." Believing means not wanting to know what is fact. Human beings are most apt to believe what they least understand. Therefore, you may rather have a mind opened by wonder than one closed by belief. The greatest derangement of the mind is to believe in something because one wishes it to be so.

The history of mankind is filled with unsettling normative perspectives reflected in, for example, inquisitions, witch hunts, denunciations, and brainwashing techniques. The "sacred beliefs" are found not only within religion, but also within ideologies, and could even include science. In much the same way, many scientists try to "save the theory." For example, the Freudian treatment is a kind of brainwashing by the therapist where the patient is in a suggestive mood completely and religiously believing in whatever the therapist is making of him/her and blaming himself/herself in all cases. There is this huge lumbering momentum from the Cold War where thinking is still not appreciated. Nothing is so firmly believed as that which is least known.

The history of humanity is also littered with discarded belief-models. However, this does not mean that whoever invented a model did not understand what was going on, nor that the model had no utility or practical value. The main idea was the cultural value of any wrong model. The falseness of a belief is not necessarily an objection to a belief. The question is, to what extent is it life-promoting, and life enhancing for the believer?

Opinions (or feelings) are slightly less extreme than beliefs; however, they are dogmatic. An opinion means that a person has certain views that they think are right. Also, they know that others are entitled to their own opinions. People respect others' opinions and in turn expect the same. In forming one's opinion, the empirical observations are obviously strongly affected by attitude and perception. However, opinions that are well rooted should grow and change like a healthy tree. Fact is the only instructional material that can be presented in an entirely non-dogmatic way. Everyone has a right to his/her own opinion, but no one has a right to be wrong in his/her facts.

Public opinion is often a sort of religion, with the majority as its prophet. Moreover, the prophet has a short memory and does not provide consistent opinions over time.

Rumors and gossip are even weaker than opinion. Now the question is who will believe these? For example, rumors and gossip about a person are those when you hear something you like, about someone you do not. Here is an example you might be familiar with: Why is there no Nobel Prize for mathematics? It is the opinion of many that Alfred Nobel caught his wife in an amorous situation with Mittag-Leffler, the foremost Swedish mathematician at the time. Therefore, Nobel was afraid that if he were to establish a mathematics prize, the first to get it would be M-L. The story persists, no matter how often one repeats the plain fact that Nobel was not married.

To understand the difference between feeling and strategic thinking, consider carefully the following true statement: He that thinks himself the happiest man really is so; but he that thinks himself the wisest is generally the greatest fool. Most people do not ask for facts in making up their decisions. They would rather have one good, soul-satisfying emotion than a dozen facts. This does not mean that you should not feel anything. Notice your feelings. But do not think with them.

Facts are different from beliefs, rumors, and opinions. Facts are the basis of decisions. A fact is something that is right and one can prove to be true based on evidence and logical arguments. A fact can be used to convince yourself, your friends, and your enemies. Facts are always subject to change. Data becomes information when it becomes relevant to your decision problem. Information becomes fact when the data can support it. Fact becomes knowledge when it is used in the successful completion of a structured decision process. However, a fact becomes an opinion if it allows for different interpretations, i.e., different perspectives. Note that what happened in the past is fact, not truth. Truth is what we think about what happened (i.e., a model).

Business Statistics is built up with facts, as a house is with stones. But a collection of facts is no more a useful and instrumental science for the manager than a heap of stones is a house.

Science and religion are profoundly different. Religion asks us to believe without question, even (or especially) in the absence of hard evidence. Indeed, this is essential for having a faith. Science asks us to take nothing on faith, to be wary of our penchant for self-deception, to reject anecdotal evidence. Science considers deep but healthy skepticism a prime feature. One of the reasons for its success is that science has built-in, error-correcting machinery at its very heart.

Learn how to approach information critically and discriminate in a principled way between beliefs, opinions, and facts. Critical thinking is needed to produce well-reasoned representation of reality in your modeling process. Analytical thinking demands clarity, consistency, evidence, and above all, a consecutive, focused-thinking.

Further Readings:

Boudon R., The Origin of Values: Sociology and Philosophy of Belief, Transaction Publishers, London, 2001.

Castaneda C., The Active Side of Infinity, Harperperennial Library, 2000.

Goodwin P., and G. Wright, Decision Analysis for Management Judgment, Wiley, 1998.

Jurjevich R., The Hoax of Freudism: A Study of Brainwashing the American Professionals and Laymen, Philadelphia, Dorrance, 1974.

Kaufmann W., Religions in Four Dimensions: Existential and Aesthetic, Historical and Comparative, Reader's Digest Press, 1976.

What is Statistical Data Analysis? Data are not Information!

Data are not information! To determine what statistical data analysis is, one must first define statistics. Statistics is a set of methods that are used to collect, analyze, present, and interpret data. Statistical methods are used in a wide variety of occupations and help people identify, study, and solve many complex problems. In the business and economic world, these methods enable decision makers and managers to make informed and better decisions about uncertain situations.

Vast amounts of statistical information are available in today's global and economic environment because of continual improvements in computer technology. To compete successfully globally, managers and decision makers must be able to understand the information and use it effectively. Statistical data analysis provides hands-on experience to promote the use of statistical thinking and techniques in order to make educated decisions in the business world.

Computers play a very important role in statistical data analysis. The statistical software package, SPSS, which is used in this course, offers extensive data-handling capabilities and numerous statistical analysis routines that can analyze small to very large data sets. The computer will assist in the summarization of data, but statistical data analysis focuses on the interpretation of the output to make inferences and predictions.

Studying a problem through the use of statistical data analysis usually involves four basic steps.

1. Defining the problem

2. Collecting the data

3. Analyzing the data

4. Reporting the results

Defining the Problem

An exact definition of the problem is imperative in order to obtain accurate data about it. It is extremely difficult to gather data without a clear definition of the problem.

Collecting the Data

Designing ways to collect data is an important job in statistical data analysis. Two important aspects of a statistical study are:

Population - a set of all the elements of interest in a study

Sample - a subset of the population

Statistical inference refers to extending the knowledge obtained from a random sample to the whole population from which it was drawn. This is known in mathematics as inductive reasoning, that is, knowledge of the whole from a particular. Its main application is in testing hypotheses about a given population.

The purpose of statistical inference is to obtain information about a population from information contained in a sample. It is usually not feasible to test the entire population, so a sample is the only realistic way to obtain data because of the time and cost constraints. Data can be either quantitative or qualitative. Qualitative data are labels or names used to identify an attribute of each element. Quantitative data are always numeric and indicate either how much or how many.

For the purpose of statistical data analysis, distinguishing between cross-sectional and time series data is important. Cross-sectional data are data collected at the same or approximately the same point in time. Time series data are data collected over several time periods.

Data can be collected from existing sources or obtained through observation and experimental studies designed to obtain new data. In an experimental study, the variable of interest is identified. Then one or more factors in the study are controlled so that data can be obtained about how the factors influence the variables. In observational studies, no attempt is made to control or influence the variables of interest. A survey is perhaps the most common type of observational study.

Analyzing the Data

Statistical data analysis divides the methods for analyzing data into two categories: exploratory methods and confirmatory methods. Exploratory methods are used to discover what the data seems to be saying by using simple arithmetic and easy-to-draw pictures to summarize data. Confirmatory methods use ideas from probability theory in the attempt to answer specific questions. Probability is important in decision making because it provides a mechanism for measuring, expressing, and analyzing the uncertainties associated with future events. The majority of the topics addressed in this course fall under this heading.

Reporting the Results

Through inference, estimates of, or tests of claims about, the characteristics of a population can be obtained from a sample. The results may be reported in the form of a table, a graph or a set of percentages. Because only a small collection (sample) has been examined and not an entire population, the reported results must reflect the uncertainty through the use of probability statements and intervals of values.

To conclude, a critical aspect of managing any organization is planning for the future. Good judgment, intuition, and an awareness of the state of the economy may give a manager a rough idea or "feeling" of what is likely to happen in the future. However, converting that feeling into a number that can be used effectively is difficult. Statistical data analysis helps managers forecast and predict future aspects of a business operation. The most successful managers and decision makers are the ones who can understand the information and use it effectively.

Visit also: Different Approaches to Statistical Thinking

Data Processing: Coding, Typing, and Editing

Data are often recorded manually on data sheets. Unless the numbers of observations and variables are small, the data must be analyzed on a computer. The data will then go through three stages:

Coding: the data are transferred, if necessary, to coded sheets.

Typing: the data are typed and stored by at least two independent data entry persons. For example, when the Current Population Survey and other monthly surveys were taken using paper questionnaires, the U.S. Census Bureau used double key data entry.

Editing: the data are checked by comparing the two independent typed data. The standard practice for key-entering data from paper questionnaires is to key in all the data twice. Ideally, the second time should be done by a different key entry operator whose job specifically includes verifying mismatches between the original and second entries. It is believed that this "double-key/verification" method produces a 99.8% accuracy rate for total keystrokes.
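The comparison step of double-key verification can be sketched as below. The record layout and the function name `find_mismatches` are hypothetical; the sketch only flags the cells where the two independently keyed versions disagree, so that a verifier can check them against the paper original.

```python
def find_mismatches(first_entry, second_entry):
    """Return (row, column, value_1, value_2) for every cell where the two
    independently keyed versions of the same data sheet disagree."""
    if len(first_entry) != len(second_entry):
        raise ValueError("the two entries have different numbers of rows")
    mismatches = []
    for r, (row_a, row_b) in enumerate(zip(first_entry, second_entry)):
        if len(row_a) != len(row_b):
            raise ValueError(f"row {r} has different lengths in the two entries")
        for c, (a, b) in enumerate(zip(row_a, row_b)):
            if a != b:
                mismatches.append((r, c, a, b))
    return mismatches

# Hypothetical records keyed by two operators; the second operator made an
# inversion error (123.45 typed as 123.54) in the first record.
operator_1 = [["101", "123.45", "F"], ["102", "98.60", "M"]]
operator_2 = [["101", "123.54", "F"], ["102", "98.60", "M"]]

to_verify = find_mismatches(operator_1, operator_2)
for row, col, a, b in to_verify:
    print(f"row {row}, column {col}: '{a}' vs '{b}' needs checking")
```

Values are compared as keyed text rather than as numbers, so that differences such as "98.6" versus "98.60" are also brought to the verifier's attention.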

Types of error: Recording error, typing error, transcription error (incorrect copying), Inversion (e.g., 123.45 is typed as 123.54), Repetition (when a number is repeated), Deliberate error.

Type of Data and Levels of Measurement

Information can be collected in statistics using qualitative or quantitative data.

Qualitative data, such as the eye color of a group of individuals, are not computable by arithmetic relations. They are labels that indicate in which category or class an individual, object, or process falls. They are called categorical variables.

Quantitative data sets consist of measures that take numerical values for which descriptions such as means and standard deviations are meaningful. They can be put into an order and further divided into two groups: discrete data or continuous data. Discrete data are countable data, for example, the number of defective items produced during a day's production. Continuous data, when the parameters (variables) are measurable, are expressed on a continuous scale. For example, measuring the height of a person.

The first activity in statistics is to measure or count. Measurement/counting theory is concerned with the connection between data and reality. A set of data is a representation (i.e., a model) of the reality based on numerical and measurable scales. Data are called "primary type" data if the analyst has been involved in collecting the data relevant to his/her investigation. Otherwise, it is called "secondary type" data.

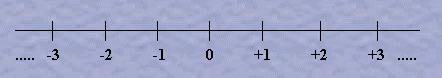

Data come in the forms of Nominal, Ordinal, Interval and Ratio (remember the French word NOIR for color black). Data can be either continuous or discrete.

Both zero and unit of measurements are arbitrary in the Interval scale. While the unit of measurement is arbitrary in Ratio scale, its zero point is a natural attribute. The categorical variable is measured on an ordinal or nominal scale.

Measurement theory is concerned with the connection between data and reality. Both statistical theory and measurement theory are necessary to make inferences about reality.

Since statisticians live for precision, they prefer Interval/Ratio levels of measurement.

Problems with Stepwise Variable Selection

Here are some of the common problems with stepwise variable selection in regression analysis.

- It yields R-squared values that are badly biased high.

- The F and chi-squared tests quoted next to each variable on the printout do not have the claimed distribution.

- The method yields confidence intervals for effects and predicted values that are falsely narrow.

- It yields P-values that do not have the proper meaning, and the proper correction for them is a very difficult problem.

- It gives biased regression coefficients that need shrinkage, i.e., the coefficients for remaining variables are too large.

- It has severe problems in the presence of collinearity.

- It is based on methods (e.g. F-tests for nested models) that were intended to be used to test pre-specified hypotheses.

- Increasing the sample size does not help very much.

Note also that the all-possible-subsets approach does not remove any of the above problems.
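The upward bias in R-squared can be seen in a small simulation. The sketch below uses a simplified greedy forward selection (not the full stepwise procedure of any particular package) on pure-noise data, so the true R-squared is zero; the sample size, number of candidate variables, and function names are hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(42)
n, n_candidates, n_keep = 50, 30, 5

def r_squared(X, y):
    """R-squared of an ordinary least squares fit with an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

def forward_select(X, y, k):
    """Greedy forward selection: repeatedly add the column that most
    increases R-squared until k columns have been chosen."""
    chosen = []
    for _ in range(k):
        rest = [j for j in range(X.shape[1]) if j not in chosen]
        best = max(rest, key=lambda j: r_squared(X[:, chosen + [j]], y))
        chosen.append(best)
    return chosen

selected_r2, fixed_r2 = [], []
for _ in range(100):
    X = rng.normal(size=(n, n_candidates))
    y = rng.normal(size=n)  # y is unrelated to every column of X
    selected_r2.append(r_squared(X[:, forward_select(X, y, n_keep)], y))
    fixed_r2.append(r_squared(X[:, :n_keep], y))  # columns fixed in advance

print("mean R-squared, data-selected variables:", round(np.mean(selected_r2), 3))
print("mean R-squared, pre-specified variables:", round(np.mean(fixed_r2), 3))
```

Both models contain the same number of variables, and none of the variables has any real relation to y; the gap between the two averages is produced by the selection step alone.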

Further Reading:

Derksen, S. and H. Keselman, Backward, forward and stepwise automated subset selection algorithms, British Journal of Mathematical and Statistical Psychology, 45, 265-282, 1992.

An Alternative Approach for Estimating a Regression Line

The following approach is the so-called "distribution-free method" for estimating parameters in a simple regression y = mx + b:

- Rewrite y = mx + b as b = -xm + y.

- Every data point (xi, yi) corresponds to a line b = -xi m + yi in the Cartesian coordinates plane (m, b), and an estimate of m and b can be obtained from the intersection of pairs of such lines. There are at most n(n-1)/2 such estimates, one for each pair of data points.

- Take the medians to get the final estimates.
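The steps above can be sketched as follows. Two lines b = -xi m + yi and b = -xj m + yj intersect at m = (yj - yi)/(xj - xi) and b = yi - m xi, provided xi and xj differ. The data set, which lies on y = 2x + 1 apart from one gross outlier, and the function name `median_line` are hypothetical.

```python
from itertools import combinations
from statistics import median

def median_line(points):
    """Estimate slope m and intercept b of y = m*x + b from the medians of
    the pairwise intersections in the (m, b) plane."""
    slopes, intercepts = [], []
    for (x1, y1), (x2, y2) in combinations(points, 2):
        if x1 == x2:
            continue  # parallel lines in the (m, b) plane: no intersection
        m = (y2 - y1) / (x2 - x1)
        slopes.append(m)
        intercepts.append(y1 - m * x1)
    if not slopes:
        raise ValueError("need at least two points with distinct x values")
    return median(slopes), median(intercepts)

# Hypothetical data on y = 2x + 1, with one gross outlier at x = 4.
data = [(0, 1), (1, 3), (2, 5), (3, 7), (4, 30), (5, 11), (6, 13)]
m_hat, b_hat = median_line(data)
print("slope:", m_hat, "intercept:", b_hat)
```

Because medians are used, the single outlier affects only a minority of the pairwise estimates and leaves the final estimates on the line followed by the other points.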

Further Readings:

Cornish-Bowden A., Analysis of Enzyme Kinetic Data, Oxford Univ Press, 1995.

Hald A., A History of Mathematical Statistics: From 1750 to 1930, Wiley, New York, 1998. Among other things, the author points out that at the beginning of the 19th century researchers had four different methods to solve fitting problems: the Mayer-Laplace method of averages, the Boscovich-Laplace method of least absolute deviations, the Laplace method of minimizing the largest absolute residual, and the Legendre method of minimizing the sum of squared residuals. The only way of choosing between these methods was to compare the resulting estimates and residuals.

Multivariate Data Analysis

Data are easy to collect; what we really need in complex problem solving is information. We may view a data base as a domain that requires probes and tools to extract relevant information. As in the measurement process itself, appropriate instruments of reasoning must be applied to the data interpretation task. Effective tools serve in two capacities: to summarize the data and to assist in interpretation. The objectives of interpretive aids are to reveal the data at several levels of detail.

Exploring the fuzzy data picture sometimes requires a wide-angle lens to view its totality. At other times it requires a closeup lens to focus on fine detail. The graphically based tools that we use provide this flexibility. Most chemical systems are complex because they involve many variables and there are many interactions among the variables. Therefore, chemometric techniques rely upon multivariate statistical and mathematical tools to uncover interactions and reduce the dimensionality of the data.

Multivariate analysis is a branch of statistics involving the consideration of objects on each of which are observed the values of a number of variables. Multivariate techniques are used across the whole range of fields of statistical application: in medicine, physical and biological sciences, economics and social science, and of course in many industrial and commercial applications.

Principal component analysis (PCA) is used for exploring data to reduce the dimension. Generally, PCA seeks to represent n correlated random variables by a reduced set of uncorrelated variables, which are obtained by transformation of the original set onto an appropriate subspace. The uncorrelated variables are chosen to be good linear combinations of the original variables, in the sense that they explain maximal variance along orthogonal directions in the data. Two closely related techniques, principal component analysis and factor analysis, are used to reduce the dimensionality of multivariate data. In these techniques correlations and interactions among the variables are summarized in terms of a small number of underlying factors. The methods rapidly identify key variables or groups of variables that control the system under study. The resulting dimension reduction also permits graphical representation of the data so that significant relationships among observations or samples can be identified.
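A minimal sketch of principal component analysis is shown below, computed through the singular value decomposition of the centered data matrix. The simulated data (three variables, two of them driven by one hidden factor) are hypothetical, and this is one common way to compute the components rather than the only one.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical data: 200 observations of 3 variables; the first two are
# driven by the same hidden factor, the third is independent noise.
latent = rng.normal(size=(200, 1))
data = np.hstack([
    latent + 0.1 * rng.normal(size=(200, 1)),
    2.0 * latent + 0.1 * rng.normal(size=(200, 1)),
    rng.normal(size=(200, 1)),
])

centered = data - data.mean(axis=0)

# Rows of vt are the orthogonal principal directions; the squared singular
# values are proportional to the variance explained by each direction.
_, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
explained = singular_values**2 / np.sum(singular_values**2)

scores = centered @ vt.T   # uncorrelated linear combinations of the variables
reduced = scores[:, :2]    # keep two components for a two-dimensional plot

print("share of variance explained:", np.round(explained, 3))
```

Plotting the two columns of `reduced` against each other gives the kind of low-dimensional graphical representation described above.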

Other techniques include Multidimensional Scaling, Cluster Analysis, and Correspondence Analysis.

Further Readings:

Chatfield C., and A. Collins, Introduction to Multivariate Analysis, Chapman and Hall, 1980.

Hoyle R., Statistical Strategies for Small Sample Research, Thousand Oaks, CA, Sage, 1999.

Krzanowski W., Principles of Multivariate Analysis: A User's Perspective, Clarendon Press, 1988.

Mardia K., J. Kent and J. Bibby, Multivariate Analysis, Academic Press, 1979.

The Meaning and Interpretation of P-values (what the data say?)

The P-value, which directly depends on a given sample, attempts to provide a measure of the strength of the results of a test, in contrast to a simple reject or do not reject. If the null hypothesis is true and the chance of random variation is the only reason for sample differences, then the P-value is a quantitative measure to feed into the decision making process as evidence. The following table provides a reasonable interpretation of P-values:

| P < 0.01 | very strong evidence against H0 |

| 0.01 ≤ P < 0.05 | moderate evidence against H0 |

| 0.05 ≤ P < 0.10 | suggestive evidence against H0 |

| 0.10 ≤ P | little or no real evidence against H0 |

This interpretation is widely accepted, and many scientific journals routinely publish papers using this interpretation for the result of test of hypothesis.

For the fixed-sample size, when the number of realizations is decided in advance, the distribution of p is uniform (assuming the null hypothesis). We would express this as P(p ≤ x) = x. That means the criterion of p < 0.05 achieves α of 0.05.
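The uniformity of p under the null hypothesis can be checked by simulation. The sketch below assumes a two-sided z-test of a zero mean with known unit standard deviation; the sample size, number of replications, and seed are hypothetical choices.

```python
import math
import numpy as np

rng = np.random.default_rng(7)
n, replications = 30, 20_000

# Each row is one sample drawn with H0 true: mean 0, known standard deviation 1.
samples = rng.normal(loc=0.0, scale=1.0, size=(replications, n))
z = samples.mean(axis=1) * math.sqrt(n)

# Two-sided p-value of the z-test: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
p_values = np.array([math.erfc(abs(v) / math.sqrt(2.0)) for v in z])

# If p is uniform, the fraction of p-values at or below x should be close to x.
for x in (0.01, 0.05, 0.10, 0.50):
    print(f"fraction of p-values <= {x:.2f}: {np.mean(p_values <= x):.3f}")
```

The fraction at or below 0.05 is the simulated type I error rate of the rule "reject when p < 0.05".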

When a p-value is associated with a set of data, it is a measure of the probability that the data could have arisen as a random sample from some population described by the statistical (testing) model.

A p-value is a measure of how much evidence you have against the null hypothesis. The smaller the p-value, the more evidence you have. One may combine the p-value with the significance level to make decision on a given test of hypothesis. In such a case, if the p-value is less than some threshold (usually .05, sometimes a bit larger like 0.1 or a bit smaller like .01) then you reject the null hypothesis.

Understand that the distribution of p-values under null hypothesis H0 is uniform, and thus does not depend on a particular form of the statistical test. In a statistical hypothesis test, the P value is the probability of observing a test statistic at least as extreme as the value actually observed, assuming that the null hypothesis is true. The value of p is defined with respect to a distribution. Therefore, we could call it "model-distributional hypothesis" rather than "the null hypothesis".

In short, the p-value is the probability, computed as if the null had been true, of a result at least as extreme as the one observed. The p-value is determined by the observed value; this, however, makes it difficult to even state the inverse of p.

You may like using The P-values for the Popular Distributions Java applet.

Further Readings:

Arsham H., Kuiper's P-value as a Measuring Tool and Decision Procedure for the Goodness-of-fit Test, Journal of Applied Statistics, Vol. 15, No.3, 131-135, 1988.

Accuracy, Precision, Robustness, and Quality

Accuracy refers to the closeness of the measurements to the "actual" or "real" value of the physical quantity, whereas the term precision is used to indicate the closeness with which the measurements agree with one another, quite independently of any systematic error involved. Therefore, an "accurate" estimate has small bias. A "precise" estimate has small variance. Quality is proportional to the inverse of variance.

The robustness of a procedure is the extent to which its properties do not depend on those assumptions which you do not wish to make. This is a modification of Box's original version, and this includes Bayesian considerations, loss as well as prior. The central limit theorem (CLT) and the Gauss-Markov Theorem qualify as robustness theorems, but the Huber-Hampel definition does not qualify as a robustness theorem.

We must always distinguish between bias robustness and efficiency robustness. It seems obvious to me that no statistical procedure can be robust in all senses. One needs to be more specific about what the procedure must be protected against. If the sample mean is sometimes seen as a robust estimator, it is because the CLT guarantees a 0 bias for large samples regardless of the underlying distribution. This estimator is bias robust, but it is clearly not efficiency robust, as its variance can increase endlessly. That variance can even be infinite if the underlying distribution is Cauchy, or Pareto with a small shape parameter. This is the reason for which the sample mean lacks robustness according to the Huber-Hampel definition. The problem is that the M-estimator advocated by Huber, Hampel and a couple of other folks is bias robust only if the underlying distribution is symmetric.
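The lack of efficiency robustness of the sample mean can be seen in a small simulation. The sketch below draws repeated samples from a standard Cauchy distribution and compares how much the sample mean and the sample median vary from sample to sample. The spread is measured by the interquartile range rather than the variance, since the variance is not finite here; the function names, sample size, and seed are illustrative assumptions.

```python
import math
import random
import statistics


def cauchy_sample(rng, n):
    """Draw n standard Cauchy values by inverting the CDF."""
    return [math.tan(math.pi * (rng.random() - 0.5)) for _ in range(n)]


def interquartile_range(values):
    """Distance between the third and first quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return q3 - q1


def spread_of_estimators(n=101, replications=500, seed=7):
    """IQR of the sample mean and of the sample median across replications."""
    rng = random.Random(seed)
    means, medians = [], []
    for _ in range(replications):
        sample = cauchy_sample(rng, n)
        means.append(statistics.fmean(sample))
        medians.append(statistics.median(sample))
    return interquartile_range(means), interquartile_range(medians)


if __name__ == "__main__":
    iqr_mean, iqr_median = spread_of_estimators()
    print(f"IQR of sample means:   {iqr_mean:.3f}")
    print(f"IQR of sample medians: {iqr_median:.3f}")
```

Both estimators are centred on the true location (zero), but for Cauchy data the sample mean does not become less variable as the sample grows, whereas the median does.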

In the context of survey sampling, two types of statistical inferences are available: the model-based inference, and the design-based inference, which exploits only the randomization entailed by the sampling process (no assumption needed about the model). Unbiased design-based estimators are usually referred to as robust estimators because the unbiasedness is true for all possible distributions. It seems clear, however, that these estimators can still be of poor quality, as the variance can be unduly large.

However, other people will use the word in other (imprecise) ways. Kendall's Vol. 2, Advanced Theory of Statistics, also cites Box, 1953; and he makes a less useful statement about assumptions. In addition, Kendall states in one place that robustness means (merely) that the test size, α, remains constant under different conditions. This is what people are using, apparently, when they claim that two-tailed t-tests are "robust" even when variances and sample sizes are unequal. I, personally, do not like to call the tests robust when the two versions of the t-test, which are approximately equally robust, may have 90% different results when you compare which samples fall into the rejection interval (or region).

I find it easier to use the phrase, "There is a robust difference", which means that the same finding comes up no matter how you perform the test, what (justifiable) transformation you use, where you split the scores to test on dichotomies, etc., or what outside influences you hold constant as covariates.

Influence Function and Its Applications

The influence function of an estimate at the point x is essentially the change in the estimate when an observation of infinitesimal mass is added at the point x, divided by the mass of the observation. The influence function gives the infinitesimal sensitivity of the solution to the addition of a new datum. The main potential application of the influence function is in comparing methods of estimation and ranking their robustness. A commonsense use of the influence function is in robust procedures in which the extreme values are dropped, i.e., data trimming.

There are a few fundamental statistical tests, such as the test for randomness, the test for homogeneity of population, the test for detecting outlier(s), and then the test for normality. For all these necessary tests there are powerful procedures in the statistical data analysis literature. Moreover, since the authors are limiting their presentation to the test of mean, they can invoke the CLT for, say, any sample of size over 30.

The concept of influence is the study of the impact on the conclusions and inferences in various fields of study, including statistical data analysis. This is possible by a perturbation analysis. For example, the influence function of an estimate is the change in the estimate caused by an infinitesimal change in a single observation, divided by the amount of the change. It acts as the sensitivity analysis of the estimate.
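A finite-sample version of this idea, often called a sensitivity curve, adds one observation at a point x and rescales the resulting change in the estimate by the mass of that observation. The sketch below is an approximation of the influence function, not its formal definition; the function name `sensitivity_curve` and the data values are assumptions made for illustration.

```python
import statistics


def sensitivity_curve(estimator, sample, x):
    """Finite-sample analogue of the influence function at the point x.

    One observation at x carries mass 1/(n + 1) in the enlarged sample,
    so the change in the estimate is divided by that mass.
    """
    n = len(sample)
    change = estimator(list(sample) + [x]) - estimator(sample)
    return change * (n + 1)


if __name__ == "__main__":
    sample = [2.1, 2.4, 2.5, 2.7, 3.0]  # hypothetical measurements
    for x in (3.0, 10.0, 100.0, 1000.0):
        mean_effect = sensitivity_curve(statistics.fmean, sample, x)
        median_effect = sensitivity_curve(statistics.median, sample, x)
        print(f"x = {x:7.1f}  mean: {mean_effect:9.2f}  median: {median_effect:6.2f}")
```

For the mean, the rescaled change equals x minus the sample mean, so it grows without bound as x moves away. For the median it levels off, which is one way to rank the two estimators by robustness.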

The influence function has been extended to "what-if" analysis, robustness, and scenario analysis, such as adding or deleting an observation, the impact of outlier(s), and so on. For example, for a given distribution, whether normal or otherwise, for which population parameters have been estimated from samples, the confidence interval for estimates of the median or mean is smaller than for those values that tend towards the extremities, such as the 90th or 10th percentiles. In estimating the mean, one can invoke the central limit theorem for any sample of size over, say, 30. However, we cannot be sure that the calculated variance is the true variance of the population; therefore greater uncertainty creeps in, and one needs to use the influence function as a measuring tool and decision procedure.

Further Readings:

Melnikov Y., Influence Functions and Matrices, Dekker, 1999.

What is Imprecise Probability?

Imprecise probability is a generic term for the many mathematical models that measure chance or uncertainty without sharp numerical probabilities. These models include belief functions, capacities' theory, comparative probability orderings, convex sets of probability measures, fuzzy measures, interval-valued probabilities, possibility measures, plausibility measures, and upper and lower expectations or previsions. Such models are needed in inference problems where the relevant information is scarce, vague or conflicting, and in decision problems where preferences may also be incomplete.

What is a Meta-Analysis?

A Meta-analysis deals with a set of RESULTs to give an overall RESULT that is comprehensive and valid.

a) Especially when Effect-sizes are rather small, the hope is that one can gain good power by essentially pretending to have the larger N as a valid, combined sample.

b) When effect sizes are rather large, then the extra POWER is not needed for main effects of design: Instead, it theoretically could be possible to look at contrasts between the slight variations in the studies themselves.

For example, to compare two effect sizes (r) obtained by two separate studies, you may use:

$$Z = \frac{z_1 - z_2}{\sqrt{\dfrac{1}{n_1 - 3} + \dfrac{1}{n_2 - 3}}}$$

where z1 and z2 are Fisher transformations of r, and the two ni's in the denominator represent the sample size for each study.
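The comparison of two correlations can be sketched as follows: each r is passed through the Fisher transformation, and the difference is divided by the square root of 1/(n1 - 3) + 1/(n2 - 3). The function names and the two-sided normal P-value are assumptions added here for illustration.

```python
import math


def fisher_z(r):
    """Fisher transformation of a correlation coefficient r."""
    return math.atanh(r)


def compare_correlations(r1, n1, r2, n2):
    """Z statistic and two-sided P-value for H0: the two correlations are equal."""
    standard_error = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z_stat = (fisher_z(r1) - fisher_z(r2)) / standard_error
    # Two-sided tail area of the standard normal distribution.
    p_value = math.erfc(abs(z_stat) / math.sqrt(2.0))
    return z_stat, p_value


if __name__ == "__main__":
    # Hypothetical studies: r = 0.6 with n = 103, and r = 0.3 with n = 103.
    z_stat, p_value = compare_correlations(0.6, 103, 0.3, 103)
    print(f"Z = {z_stat:.3f}, two-sided P = {p_value:.4f}")
```

Each study needs more than three observations for the standard error to be defined.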

This comparison is valid only if you really trust that "all things being equal" will hold up. The typical "meta" study does not do the tests for homogeneity that should be required.

In other words:

1. there is a body of research/data literature that you would like to summarize

2. one gathers together all the admissible examples of this literature (note: some might be discarded for various reasons)

3. certain details of each investigation are deciphered; most important would be the effect that has or has not been found, i.e., how much larger in sd units is the treatment group's performance compared to one or more controls.

4. call the values in each of the investigations in #3 mini effect sizes.

5. across all admissible data sets, you attempt to summarize the overall effect size by forming a set of individual effects, and using an overall sd as the divisor, thus yielding essentially an average effect size.

6. in the meta analysis literature, sometimes these effect sizes are further labeled as small, medium, or large.

You can look at effect sizes in many different ways, across different factors and variables, but, in a nutshell, this is what is done.
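The steps above can be sketched in a few lines. This is a deliberately simple, unweighted average of per-study effect sizes; published meta-analyses usually weight studies by their precision, which is not shown here. The study numbers are invented, and the 0.2 / 0.5 / 0.8 cut-offs used for the small, medium, and large labels are Cohen's commonly quoted conventions, an assumption not taken from the text.

```python
import statistics


def standardized_effect(mean_treatment, mean_control, sd):
    """Treatment-minus-control difference expressed in sd units."""
    return (mean_treatment - mean_control) / sd


def summarize_effects(studies):
    """Return the per-study effect sizes and their unweighted average."""
    effects = [standardized_effect(*study) for study in studies]
    return effects, statistics.fmean(effects)


def label_effect(effect_size):
    """Verbal label based on assumed conventional cut-offs (0.2, 0.5, 0.8)."""
    magnitude = abs(effect_size)
    if magnitude < 0.2:
        return "negligible"
    if magnitude < 0.5:
        return "small"
    if magnitude < 0.8:
        return "medium"
    return "large"


if __name__ == "__main__":
    # Hypothetical studies: (treatment mean, control mean, sd).
    studies = [(105.0, 100.0, 10.0), (52.0, 50.0, 8.0), (33.0, 30.0, 4.0)]
    effects, average = summarize_effects(studies)
    print("mini effect sizes:", effects)
    print("average effect size:", average, "->", label_effect(average))
```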

I recall a case in physics, in which, after a phenomenon had been observed in air, emulsion data were examined. The theory would have about a 9% effect in emulsion, and behold, the published data gave 15%. As it happens, there was no significant difference (practical, not statistical) in the theory, and also no error in the data. It was just that the results of experiments in which nothing statistically significant was found were not reported.

This non-reporting of such experiments, and often of the specific results which were not statistically significant, introduces major biases. This is also combined with the totally erroneous attitude of researchers that statistically significant results are the important ones, and that if there is no significance, the effect was not important. We really need to differentiate between the term "statistically significant" and the usual word significant.

Meta-analysis is a controversial type of literature review in which the results of individual randomized controlled studies are pooled together to try to get an estimate of the effect of the intervention being studied. It increases statistical power and is used to resolve the problem of reports which disagree with each other. It's not easy to do well and there are many inherent problems.

Further Readings:

Lipsey M., and D. Wilson, Practical Meta-Analysis, Sage Publications, 2000.

What Is the Effect Size?

Effect size (ES) is a ratio of a mean difference to a standard deviation, i.e., it is a form of z-score. Suppose an experimental treatment group has a mean score of Xe and a control group has a mean score of Xc and a standard deviation of Sc; then the effect size is equal to (Xe - Xc)/Sc.

Effect size permits the effects of different treatments to be compared, even when based on different samples and different measuring instruments.

Therefore, the ES is the mean difference between the treatment group and the control group, expressed in standard deviation units. However, the divisor differs by method: by Glass's method, ES is (mean1 - mean2)/SD of the control group, while by the Hunter-Schmidt method, ES is (mean1 - mean2)/pooled SD, then adjusted by the instrument reliability coefficient. ES is commonly used in meta-analysis and power analysis.
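The two divisors can be compared on raw data with a short sketch. It computes the effect size with the control-group SD (Glass's method) and with the pooled SD; the reliability adjustment mentioned above is left out because its exact form is not given here. The function names and the scores are hypothetical.

```python
import math
import statistics


def glass_delta(treatment, control):
    """(treatment mean - control mean) / SD of the control group."""
    difference = statistics.fmean(treatment) - statistics.fmean(control)
    return difference / statistics.stdev(control)


def pooled_sd(group1, group2):
    """Pooled standard deviation of two independent groups."""
    n1, n2 = len(group1), len(group2)
    v1, v2 = statistics.variance(group1), statistics.variance(group2)
    return math.sqrt(((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2))


def pooled_effect_size(treatment, control):
    """(treatment mean - control mean) / pooled SD, with no reliability adjustment."""
    difference = statistics.fmean(treatment) - statistics.fmean(control)
    return difference / pooled_sd(treatment, control)


if __name__ == "__main__":
    control = [10, 12, 14, 16, 18]    # hypothetical scores
    treatment = [11, 15, 17, 19, 23]  # hypothetical scores
    print("ES with control-group SD:", round(glass_delta(treatment, control), 3))
    print("ES with pooled SD:", round(pooled_effect_size(treatment, control), 3))
```

When the two groups have different spreads, as in this made-up data, the two methods give noticeably different effect sizes, so it matters which one a study reports.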

Further Readings:

Cooper H., and L. Hedges, The Handbook of Research Synthesis, NY, Russell Sage, 1994.

Lipsey M., and D. Wilson, Practical Meta-Analysis, Sage Publications, 2000.

What is the Benford's Law? What About the Zipf's Law?

What is Benford's Law: Benford's Law states that if we randomly select a number from a table of physical constants or statistical data, the probability that the first digit will be a "1" is about 0.301, rather than 0.1 as we might expect if all digits were equally likely. In general, the "law" says that the probability of the first digit being a "d" is:

$$P(d) = \log_{10}\left(1 + \frac{1}{d}\right), \qquad d = 1, 2, \ldots, 9.$$

This implies that a number in a table of physical constants is more likely to begin with a smaller digit than a larger digit. This can be observed, for instance, by examining tables of Logarithms and noting that the first pages are much more worn and smudged than later pages.
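The first-digit probabilities are easy to tabulate from the usual statement of Benford's Law, P(d) = log10(1 + 1/d). The sketch below also counts the leading digits of the first 1000 powers of 2, a sequence chosen here only as a convenient, well-known illustration; the function names are assumptions.

```python
import math
from collections import Counter


def benford_probability(d):
    """Probability that the first significant digit equals d (1 through 9)."""
    if d not in range(1, 10):
        raise ValueError("the first digit must be an integer from 1 to 9")
    return math.log10(1.0 + 1.0 / d)


def leading_digit_frequencies(numbers):
    """Relative frequency of each leading digit among positive integers."""
    counts = Counter(int(str(number)[0]) for number in numbers)
    total = sum(counts.values())
    return {d: counts.get(d, 0) / total for d in range(1, 10)}


if __name__ == "__main__":
    observed = leading_digit_frequencies(2 ** k for k in range(1000))
    for d in range(1, 10):
        print(d, f"law: {benford_probability(d):.3f}", f"powers of 2: {observed[d]:.3f}")
```

The nine probabilities sum to one and decrease steadily from d = 1 to d = 9.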

Bias Reduction Techniques

The most effective tools for reducing the bias of estimators are the Bootstrap and the Jackknife.

According to legend, Baron Munchausen saved himself from drowning in quicksand by pulling himself up using only his bootstraps. The statistical bootstrap, which uses resampling from a given set of data to mimic the variability that produced the data in the first place, has a rather more dependable theoretical basis and can be a highly effective procedure for estimation of error quantities in statistical problems.

The bootstrap creates a virtual population by duplicating the same sample over and over, and then re-samples from the virtual population to form a reference set. Then you compare your original sample with the reference set to get the exact p-value. Very often, a certain structure is "assumed" so that a residual is computed for each case. What is then re-sampled is the set of residuals, which are then added to those assumed structures before some statistic is evaluated. The purpose is often to estimate a P-level.
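A minimal sketch of the resampling idea follows. Drawing with replacement from the observed sample is equivalent to sampling from the "virtual population" of endlessly duplicated data. The sketch estimates the standard error and the bias of a statistic rather than a p-value; the function names, the number of resamples, and the data are illustrative assumptions.

```python
import random
import statistics


def bootstrap_replicates(data, statistic, n_resamples=2000, seed=0):
    """Evaluate the statistic on resamples drawn with replacement from data."""
    rng = random.Random(seed)
    n = len(data)
    return [statistic(rng.choices(data, k=n)) for _ in range(n_resamples)]


def bootstrap_standard_error(data, statistic, n_resamples=2000, seed=0):
    """Standard deviation of the bootstrap replicates."""
    return statistics.stdev(bootstrap_replicates(data, statistic, n_resamples, seed))


def bootstrap_bias(data, statistic, n_resamples=2000, seed=0):
    """Average of the replicates minus the estimate from the original sample."""
    replicates = bootstrap_replicates(data, statistic, n_resamples, seed)
    return statistics.fmean(replicates) - statistic(data)


if __name__ == "__main__":
    data = [4.1, 5.3, 3.8, 6.0, 5.1, 4.7, 5.9, 4.4, 5.0, 5.6]  # hypothetical sample
    print("bootstrap SE of the mean:", bootstrap_standard_error(data, statistics.fmean))
    print("bootstrap bias of the plug-in variance:", bootstrap_bias(data, statistics.pvariance))
```

For the sample mean, the bootstrap standard error should land close to the familiar formula based on the sample standard deviation divided by the square root of n, which gives a quick sanity check on the resampling.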

The jackknife re-computes the estimate, leaving one observation out each time. Leave-one-out replication gives you the same Case-estimates, I think, as the proper jack-knife estimation. Jackknifing does a bit of logical folding (whence, 'jackknife' -- look it up) to provide estimators of coefficients and error that (you hope) will have reduced bias.
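The leave-one-out recipe can be sketched as below, using the standard jackknife bias estimate, (n - 1) times the difference between the average leave-one-out estimate and the full-sample estimate. The function name and the data are assumptions for illustration.

```python
import statistics


def jackknife_bias_corrected(data, statistic):
    """Return (bias-corrected estimate, estimated bias) by leave-one-out."""
    data = list(data)
    n = len(data)
    full_estimate = statistic(data)
    # Re-compute the statistic n times, leaving one observation out each time.
    leave_one_out = [statistic(data[:i] + data[i + 1:]) for i in range(n)]
    bias = (n - 1) * (statistics.fmean(leave_one_out) - full_estimate)
    return full_estimate - bias, bias


if __name__ == "__main__":
    data = [4.1, 5.3, 3.8, 6.0, 5.1, 4.7, 5.9, 4.4, 5.0, 5.6]  # hypothetical sample
    corrected, bias = jackknife_bias_corrected(data, statistics.pvariance)
    print("plug-in variance (divides by n):", statistics.pvariance(data))
    print("jackknife-corrected variance:", corrected)
    print("usual variance (divides by n - 1):", statistics.variance(data))
```

Applied to the plug-in variance, which divides by n and is biased downward, the jackknife correction reproduces the usual unbiased sample variance, a convenient check that the bias reduction works as intended.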

Bias reduction techniques have wide applications in anthropology, chemistry, climatology, clinical trials, cybernetics, and ecology.

Further Readings:

Efron B., The Jackknife, The Bootstrap and Other Resampling Plans, SIAM, Philadelphia, 1982.

Efron B., and R. Tibshirani, An Introduction to the Bootstrap, Chapman & Hall (now the CRC Press), 1994.

Shao J., and D. Tu, The Jackknife and Bootstrap, Springer Verlag, 1995.

Area Under Standard Normal Curve